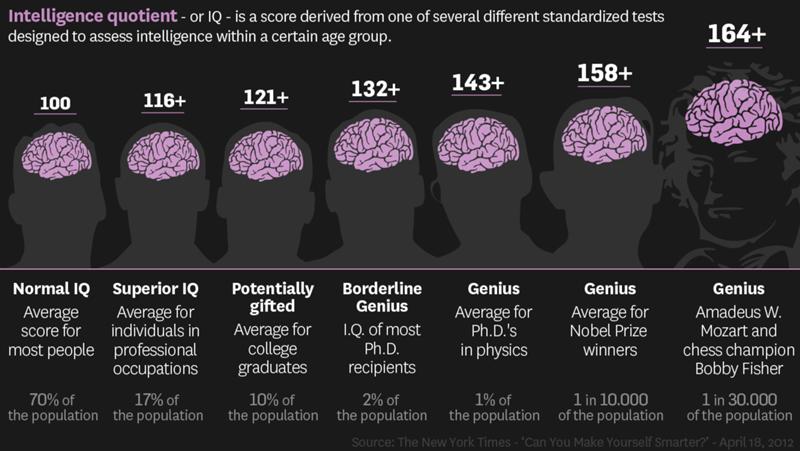

Psychological IQ tests

Intelligent intelligence testing

Standardized intelligence testing has been called one of psychology's greatest successes. It is certainly one of the field's most persistent and widely used inventions.

Since Alfred Binet first used a standardized test to identify learning-impaired Parisian children in the early 1900s, it has become one of the primary tools for identifying children with mental retardation and learning disabilities. It has helped the U.S. military place its new recruits in positions that suit their skills and abilities. And, since the administration of the original Scholastic Aptitude Test (SAT)--adapted in 1926 from an intelligence test developed for the U.S. Army during World War I--it has spawned a variety of aptitude and achievement tests that shape the educational choices of millions of students each year.

But intelligence testing has also been accused of unfairly stratifying test-takers by race, gender, class and culture; of minimizing the importance of creativity, character and practical know-how; and of propagating the idea that people are born with an unchangeable endowment of intellectual potential that determines their success in life.

Since the 1970s, intelligence researchers have been trying to preserve the usefulness of intelligence tests while addressing those concerns. They have done so in a number of ways, including updating the Wechsler Intelligence Scale for Children (WISC) and the Stanford-Binet Intelligence Scale so they better reflect the abilities of test-takers from diverse cultural and linguistic backgrounds. They have developed new, more sophisticated ways of creating, administering and interpreting those tests. And they have produced new theories and tests that broaden the concept of intelligence beyond its traditional boundaries.

As a result, many of the biases identified by critics of intelligence testing have been reduced, and new tests are available that, unlike traditional intelligence tests, are based on modern theories of brain function, says Alan Kaufman, PhD, a clinical professor of psychology at the Yale School of Medicine.

For example, in the early 1980s, Kaufman and his wife, Nadeen Kaufman, EdD, a lecturer at the Yale School of Medicine, published the Kaufman Assessment Battery for Children (K-ABC), then one of the only alternatives to the WISC and the Stanford-Binet. Together with the Woodcock-Johnson Tests of Cognitive Ability, first published in the late 1970s, and later tests, such as the Differential Ability Scales and the Cognitive Assessment System (CAS), the K-ABC helped expand the field of intelligence testing beyond the traditional tests.

Nonetheless, says Kaufman, there remains a major gap between the theories and tests that have been developed in the past 20 years and the way intelligence tests are actually used. Narrowing that gap remains a major challenge for intelligence researchers as the field approaches its 100th anniversary.

King of the hill

Among intelligence tests for children, one test currently dominates the field: the WISC-III, the third revision of psychologist David Wechsler's classic 1949 test for children, which was modeled after Army intelligence tests developed during World War I.

Since the 1970s, says Kaufman, "the field has advanced in terms of incorporating new, more sophisticated methods of interpretation, and it has very much advanced in terms of statistics and methodological sophistication in development and construction of tests. But the field of practice has lagged woefully behind."

Nonetheless, people are itching for change, says Jack Naglieri, PhD, a psychologist at George Mason University who has spent the past two decades developing the CAS in collaboration with University of Alberta psychologist J.P. Das, PhD. Practitioners want tests that can help them design interventions that will actually improve children's learning; that can distinguish between children with different conditions, such as a learning disability or attention deficit disorder; and that will accurately measure the abilities of children from different linguistic and cultural backgrounds.

Naglieri's own test, the CAS, is based on the theories of Soviet neuropsychologist A.R. Luria, as is Kaufman's K-ABC. Unlike traditional intelligence tests, says Naglieri, the CAS helps teachers choose interventions for children with learning problems, identifies children with learning disabilities and attention deficit disorder and fairly assesses children from diverse backgrounds. Now, he says, the challenge is to convince people to give up the traditional scales, such as the WISC, with which they are most comfortable.

According to Nadeen Kaufman, that might not be easy to do. She believes that the practice of intelligence testing is divided between those with a neuropsychological bent, who have little interest in the subtleties of new quantitative tests, and those with an educational bent, who are increasingly shifting their interest away from intelligence and toward achievement. Neither group, in her opinion, is eager to adopt new intelligence tests.

For Naglieri, however, it is clear that there is still a great demand for intelligence tests that can help teachers better instruct children with learning problems. The challenge is convincing people that tests such as the CAS--which do not correlate highly with traditional tests--still measure something worth knowing. In fact, Naglieri believes that they measure something even more worth knowing than what the traditional tests measure. "I think we're at a really good point in our profession, where change can occur," he says, "and I think that what it's going to take is good data."

Pushing the envelope

The Kaufmans and Naglieri have worked within the testing community to effect change; their main concern is with the way tests are used, not with the basic philosophy of testing. But other reformers have launched more fundamental criticisms, ranging from "Emotional Intelligence" (Bantam Books, 1995), by Daniel Goleman, PhD, which suggested that "EI" can matter more than IQ (see article on page 52), to the multiple intelligences theory of Harvard University psychologist Howard Gardner, PhD, and the triarchic theory of successful intelligence of APA President Robert J. Sternberg, PhD, of Yale University. These very different theories have one thing in common: the assumption that traditional theories and tests fail to capture essential aspects of intelligence.

But would-be reformers face significant challenges in convincing the testing community that theories that sound great on paper--and may even work well in the laboratory--will fly in the classroom, says Nadeen Kaufman. "A lot of these scientists have not been able to operationalize their contributions in a meaningful way for practice," she explains.

In the early 1980s, for example, Gardner attacked the idea that there was a single, immutable intelligence, instead suggesting that there were at least seven distinct intelligences: linguistic, logical-mathematical, musical, bodily-kinesthetic, spatial, interpersonal and intrapersonal. (He has since added existential and naturalist intelligences.) But that formulation has had little impact on testing, in part because the kinds of quantitative factor-analytic studies that might validate the theory in the eyes of the testing community have never been conducted.

Sternberg, in contrast, has taken a more direct approach to changing the practice of testing. His Sternberg Triarchic Abilities Test (STAT) is a battery of multiple-choice questions that tap into the three independent aspects of intelligence--analytic, practical and creative--proposed in his triarchic theory.

Recently, Sternberg and his collaborators from around the United States completed the first phase of a College Board-sponsored Rainbow Project to put the triarchic theory into practice. The goal of the project was to enhance prediction of college success and increase equity among ethnic groups in college admissions. About 800 college students took the STAT along with performance-based measures of creativity and practical intelligence.

Sternberg and his collaborators found that triarchic measures predicted a significant portion of the variance in college grade point average (GPA), even after SAT scores and high school GPA had been accounted for. The test also produced smaller differences between ethnic groups than did the SAT. In the next phase of the project, the researchers will fine-tune the test and administer it to a much larger sample of students, with the ultimate goal of producing a test that could serve as a supplement to the SAT.
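The claim that triarchic measures predicted college GPA "even after SAT scores and high school GPA had been accounted for" is a claim of incremental validity. This is usually checked with a hierarchical regression: fit GPA on the baseline predictors, add the new measure, and look at the change in R². The plain-Python sketch below illustrates that logic only. The data are synthetic, and the variable names and effect sizes are hypothetical. This is not the Rainbow Project's data or analysis, and it omits the significance test a real study would report.

```python
import random

def r_squared(X, y):
    """R^2 from an ordinary least squares fit of y on the columns of X plus an intercept."""
    n = len(y)
    rows = [[1.0] + list(row) for row in X]  # prepend an intercept column
    k = len(rows[0])
    # Build the normal equations (X'X) b = X'y.
    A = [[sum(rows[i][p] * rows[i][q] for i in range(n)) for q in range(k)] for p in range(k)]
    b = [sum(rows[i][p] * y[i] for i in range(n)) for p in range(k)]
    # Solve them by Gaussian elimination with partial pivoting.
    for col in range(k):
        piv = max(range(col, k), key=lambda i: abs(A[i][col]))
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, k):
            f = A[r][col] / A[col][col]
            for c in range(col, k):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    coef = [0.0] * k
    for r in range(k - 1, -1, -1):  # back substitution
        coef[r] = (b[r] - sum(A[r][c] * coef[c] for c in range(r + 1, k))) / A[r][r]
    preds = [sum(c * v for c, v in zip(coef, row)) for row in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - yhat) ** 2 for yi, yhat in zip(y, preds))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

# Synthetic, roughly standardized scores (hypothetical effect sizes, fixed seed).
rng = random.Random(42)
sat, hs_gpa, triarchic, college_gpa = [], [], [], []
for _ in range(800):
    s = rng.gauss(0, 1)
    h = 0.5 * s + rng.gauss(0, 1)
    t = 0.3 * s + rng.gauss(0, 1)
    sat.append(s)
    hs_gpa.append(h)
    triarchic.append(t)
    college_gpa.append(0.3 * s + 0.3 * h + 0.2 * t + rng.gauss(0, 1))

r2_base = r_squared(list(zip(sat, hs_gpa)), college_gpa)
r2_full = r_squared(list(zip(sat, hs_gpa, triarchic)), college_gpa)
print(f"baseline R^2 = {r2_base:.3f}, with triarchic = {r2_full:.3f}, "
      f"change = {r2_full - r2_base:.3f}")
```

The change in R² is the share of variance in the outcome that the added measure explains beyond the baseline predictors. Because the models are nested, ordinary least squares guarantees that this change is never negative. In practice, the question is whether the change is large enough to matter.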

Questioning the test

Beyond the task of developing better theories and tests of intelligence lies a more fundamental question: Should we even be using intelligence tests in the first place?

In certain situations where intelligence tests are currently being used, the consensus answer appears to be "no." A recent report of the President's Commission on Excellence in Special Education (PCESE), for example, suggests that the use of intelligence tests to diagnose learning disabilities should be discontinued.

For decades, learning disabilities have been diagnosed using the "IQ-achievement discrepancy model," according to which children whose achievement scores are a standard deviation or more below their IQ scores are identified as learning disabled.
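The rule just described can be sketched in a few lines. This is a minimal sketch of the simple-difference version only. It assumes both scores are reported on the common standard-score metric (mean 100, SD 15), and it uses the one-standard-deviation cutoff from the text. The child labels and scores are hypothetical. Actual implementations have differed, and some use regression-based discrepancies instead of raw differences.

```python
SD = 15  # standard deviation of the standard-score metric (assumed)

def discrepancy_flag(iq: float, achievement: float, cutoff_sd: float = 1.0) -> bool:
    """Return True if the achievement score is cutoff_sd or more SDs below the IQ score."""
    return (iq - achievement) >= cutoff_sd * SD

# Hypothetical (IQ, achievement) pairs for three children.
children = {"A": (110, 92), "B": (85, 75), "C": (100, 85)}
for name, (iq, ach) in children.items():
    print(name, iq - ach, discrepancy_flag(iq, ach))
```

The function returns only a yes/no flag. That is the limitation Harrison raises next: the output says nothing about what kind of intervention might help the child.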

The problem with that model, says Patti Harrison, PhD, a professor of school psychology at the University of Alabama, is that the discrepancy doesn't tell you anything about what kind of intervention might help the child learn. Furthermore, the child's actual behavior in the classroom and at home is often a better indicator of a child's ability than an abstract intelligence test, so children might get educational services that are more appropriate to their needs if IQ tests were discouraged, she says.

Even staunch supporters of intelligence testing, such as Naglieri and the Kaufmans, believe that the IQ-achievement discrepancy model is flawed. But, unlike the PCESE, they don't see that as a reason for getting rid of intelligence tests altogether.

For them, the problem with the discrepancy model is that it is based on a fundamental misunderstanding of the Wechsler scores, which were never intended to be used as a single, summed number. So the criticism of the discrepancy model is correct, says Alan Kaufman, but it misses the real issue: whether or not intelligence tests, when properly administered and interpreted, can be useful.

"The movement that's trying to get rid of IQ tests is failing to understand that these tests are valid in the hands of a competent practitioner who can go beyond the numbers--or at least use the numbers to understand what makes the person tick, to integrate those test scores with the kind of child you're looking at, and to blend those behaviors with the scores to make useful recommendations," he says.

Intelligence tests help psychologists make recommendations about the kind of teaching that will benefit a child most, according to Ron Palomares, PhD, assistant executive director in the APA Practice Directorate's Office of Policy and Advocacy in the Schools. Psychologists are taught to assess patterns of performance on intelligence tests and to obtain clinical observations of the child during the testing session. That, he says, removes the focus from a single IQ score and allows for an assessment of the child as a whole, which can then be used to develop individualized teaching strategies.

Critics of intelligence testing often fail to consider that most of the alternatives are even more prone to problems of fairness and validity than the measures that are currently used, says APA President-elect Diane F. Halpern, PhD, of Claremont McKenna College.

"We will always need some way of making intelligent decisions about people," says Halpern. "We're not all the same; we have different skills and abilities. What's wrong is thinking of intelligence as a fixed, innate ability, instead of something that develops in a context."

What Do IQ Tests Test?: Interview with Psychologist W. Joel Schneider

W. Joel Schneider is a psychologist at Illinois State University, dividing his time equally between the Clinical-Counseling program and the Quantitative Psychology program. He also runs the College Learning Assessment Service in which students and adults from the community can learn about their cognitive and academic strengths and weaknesses. His primary research interests lie in evaluating psychological evaluations. He is also interested in helping clinicians use statistical tools to improve case conceptualization and diagnostic decisions. Schneider writes Assessing Psyche, one of my favorite blogs on IQ testing and assessment. I was delighted when he agreed to do an interview with me.

1. What is your definition of intelligence?

At the individual level, most people define intelligence in their own image. Engineers define it in ways that describe a good engineer. Artists define it in ways that describe a great artist. Scientists, entrepreneurs, and athletes all do likewise. My definition would probably describe a good academic psychologist. There is considerable diversity in these definitions but also considerable overlap. It is the redundancy in the definitions that justifies the use of the folk term intelligence. However, the inconsistencies in the various definitions are real and thus require that the term intelligence remain ambiguous so that it meets the needs of the folk who use it.

In describing intelligence as a folk concept, I do not mean that it is a primitive idea in need of an upgrade. Many folk concepts are incredibly nuanced and sophisticated. They do not need to be translated into formal scientific concepts any more than folk songs need to be rewritten as operas. Of course, just as folk melodies have been used in operas, folk concepts and formal scientific concepts can inform one another—but they do not always need to. For this reason I want to dispense with the trope that there is something fishy about the subdiscipline of intelligence research because groups of psychologists persistently disagree on the definition of intelligence. They do not need to agree, and we should not expect them to. If they did happen to agree, the particular definition they agreed upon would be an arbitrary choice and would be non-binding on any other psychologist (or anyone else). That is the nature of folk concepts; their meanings are determined flexibly, conveniently, and collectively by the folk who use them—and folk can change their minds.

Further, to say that something is a folk concept does not mean that it is not real or that it is not important; many of the words we use to describe people—polite, cool, greedy, dignified, athletic, and so forth—refer to folk concepts that most of us consider to be very real and quite important. Intelligence, too, is very real, and quite important! In fact, it is important by definition—we use the word to describe people who are able to acquire useful knowledge, and who can solve consequential problems using some combination of logic, intuition, creativity, experience, and wisdom.

See what I just did there? I tried to define intelligence with a bunch of terms that are just as vague as the thing I am trying to define. Of course, terms like useful knowledge and consequential problems are abstractions that take on specific meanings only in specific cultural contexts. If you and I have a shared understanding of all those vague terms, though, we understand each other. If we are of the same folk, our folk concepts convey useful information.

To say that a phenomenon is culturally bound does not imply that the phenomenon can mean anything at all or that it floats free of biology and physics. The meaning of athletic, for example, might vary greatly depending on a person’s age, sex, and past record of achievement, among many other factors. Even though the meaning of athletic varies depending on context, its meaning is still constrained to refer to skills in physical activities such as sports. Just because athleticism is a folk concept, it does not mean that it has no biological determinants. It just means that there will never be a single list of biological determinants of athleticism that apply to everyone to the same degree in all situations. However, some biological determinants will be on almost every list. Useful scientific research on what determines athletic skill is quite possible. So it is with intelligence. It is a concept that has meaning only at the intersection of person, situation, and culture; yet its meaning is stable enough that it can be measured in individuals and that useful theories about it can be constructed.

Here is a particularly lucid passage from the introduction to Stern’s (1914) The Psychological Methods of Testing Intelligence:

"The objection is often made that the problem of intellectual diagnosis can in no way be successfully dealt with until we have exact knowledge of the general nature of intelligence itself. But this objection does not seem to me pertinent…. We measure electro-motive force without knowing what electricity is, and we diagnose with very delicate test methods many diseases the real nature of which we know as yet very little (p. 2)."

There is no need to shoehorn scientific concepts into folk concepts like intelligence. As the science of cognitive abilities progresses, the folk concept of intelligence will change, as it is in the nature of folk concepts to do. Witness how effective Howard Gardner (1983) has been in adjusting and expanding the meaning of intelligence. Far more important than soliciting agreement among scholars on definitions is to encourage creative researchers to do their work well, approaching the topic from diverse viewpoints. Someday much later we can sort out what a consensus definition of intelligence might be if that ever seems like a good idea. For over a century, though, there has been no looming crisis over the lack of consensus on the meaning of intelligence. There may never be one.

2. What do IQ tests test?

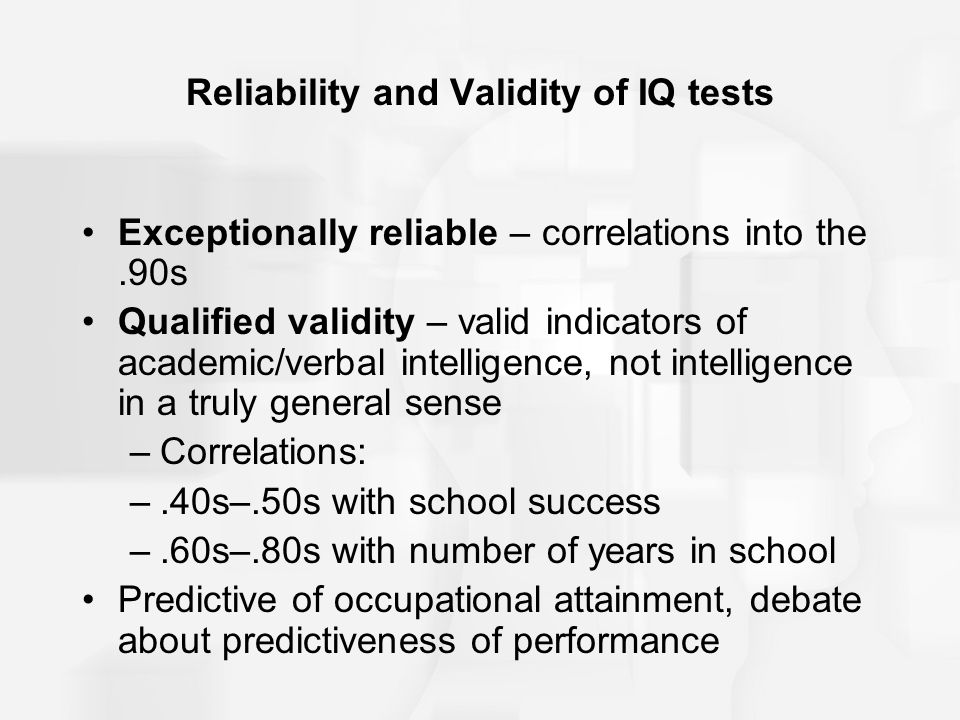

The value of IQ tests is determined more by what they correlate with than what they measure. IQ tests did not begin as operational definitions of theories that happened to correlate with important outcomes. The reason that IQ tests correlate with so many important outcomes is that they have undergone a long process akin to natural selection. The fastest way to disabuse oneself of the belief that Binet invented the first intelligence test is to read the works of Binet himself—he even shows you the test items he copied from scholars who came before him! With each new test and each test revision, good test items are retained and bad test items are dropped. Good test items have high correlations with important outcomes in every population for which the test is intended to be used. Bad items correlate with nothing but other test items. Some test items must be discarded because they have substantially different correlations with outcomes across demographic subgroups, causing the tests to be biased in favor of some groups at the expense of other groups.
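The selection process described above can be made concrete with a toy screen. It keeps an item only if the item correlates with an external outcome in every subgroup and those correlations are similar across subgroups. This is a simplified illustration, not a formal differential item functioning procedure such as Mantel-Haenszel or IRT-based methods. The item names, subgroup labels, thresholds (`min_r`, `max_gap`), and data are all hypothetical.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def retained_items(items, outcomes, min_r=0.3, max_gap=0.2):
    """Keep items that correlate at least min_r with the outcome in every subgroup
    and whose subgroup correlations differ by no more than max_gap."""
    kept = []
    for name, responses in items.items():
        rs = [pearson(responses[g], outcomes[g]) for g in outcomes]
        if min(rs) >= min_r and max(rs) - min(rs) <= max_gap:
            kept.append(name)
    return kept

# Hypothetical 0/1 item scores and outcome values for two subgroups of four people each.
outcomes = {"group_1": [1, 2, 3, 4], "group_2": [1, 2, 3, 4]}
items = {
    "item_good":   {"group_1": [0, 0, 1, 1], "group_2": [0, 0, 1, 1]},
    "item_biased": {"group_1": [0, 0, 1, 1], "group_2": [0, 1, 0, 1]},
    "item_noise":  {"group_1": [1, 0, 0, 1], "group_2": [1, 0, 0, 1]},
}
print(retained_items(items, outcomes))  # ['item_good']
```

In this toy data, `item_noise` is uncorrelated with the outcome in both groups, so it is dropped. `item_biased` correlates positively in both groups (about .89 versus .45), but the gap between groups exceeds `max_gap`, so it is dropped as well.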

So, as the old joke goes about a certain kind of French academic, “It works in practice—but will it work in theory?” I am not claiming that theory has played no role in test development, nor that theory has not hastened the process of test improvement. However, we typically do not see the tests that fail, many of which are very much theory-based. So we have successful tests and we have the ideas of successful test developers. Those ideas are likely to be approximately correct, but we do not yet have a strong theory of the cognitive processes that occur while taking IQ tests. There are, of course, many excellent studies that attempt to describe and explain what processes are involved in IQ test performance. Although this literature is large and sophisticated, I believe that we are still at the beginning stages of theory validation work.

A crude description of what a good IQ test should measure might be as follows. People need to be able to learn new information. One way to estimate learning ability is to teach a person new information and measure knowledge retention. This works well for simple information (e.g., recalling word lists and retelling simple stories) but it is hard to design a test in which retention of complex information is measured (e.g., memory of a lecture on Lebanese politics) without the test being contaminated by differences in prior knowledge.

Learning ability can be estimated indirectly by measuring how much a person has learned in the past. If our purpose is to measure raw learning ability, this method is poor because learning ability is confounded by learning opportunities, cultural differences, familial differences, and personality differences in conscientiousness and openness to learning. However, if the purpose of the IQ score is to forecast future learning, it is hard to do better than measures of past learning. Knowledge tests are among the most robust predictors of performance that we have.

Our society at this time in history values the ability to make generalizations from incomplete data and to deduce new information from abstract rules. IQ tests need to measure this ability to engage in abstract reasoning in ways that minimize the advantage of having prior knowledge of the content domain.

Good IQ tests should measure aspects of visual-spatial processing and auditory processing, as well as short-term memory and processing speed.

3. What does a person’s global IQ score mean? If a person’s IQ score is low, do you think that means they are necessarily dumb?

IQ is an imperfect predictor of many outcomes. A person who scores very low on a competently administered IQ test is likely to struggle in many domains. However, an IQ score will miss the mark in many individuals, in both directions.

Should we be angry at the IQ test when it misses the mark? No. All psychological measures are rubber rulers. It is in their nature to miss the mark from time to time. If the score was wrong because of incompetence, we should be angry at incompetent test administrators. We should be angry at institutions that use IQ tests to justify oppression. However, if the grossly incorrect test score was obtained by a competent, caring, and conscientious clinician, we have to accept that there are limits to what can be known. Competent, caring, and conscientious clinicians understand these limits and factor their uncertainty into their interpretations and into any decisions based on these interpretations. If an institution uses test scores to make high-stakes decisions, the institution should have mechanisms in place to identify its mistakes (e.g., occasional re-evaluations).
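One standard way to express how "rubbery" a score is uses the standard error of measurement, SEM = SD × √(1 − reliability), which yields a confidence band around the observed score. The sketch below assumes the usual IQ metric (SD = 15), a hypothetical reliability of .95, and a 95% band (z = 1.96). It builds the simple band centered on the observed score. Some test manuals center the band on an estimated true score instead.

```python
from math import sqrt

def standard_error_of_measurement(sd: float, reliability: float) -> float:
    """SEM = SD * sqrt(1 - reliability)."""
    return sd * sqrt(1.0 - reliability)

def confidence_interval(observed: float, sd: float = 15.0,
                        reliability: float = 0.95, z: float = 1.96):
    """Band of +/- z SEMs around the observed score (reliability value is hypothetical)."""
    sem = standard_error_of_measurement(sd, reliability)
    return observed - z * sem, observed + z * sem

low, high = confidence_interval(85)
print(f"{low:.1f} to {high:.1f}")  # 78.4 to 91.6
```

Even with reliability as high as .95, an observed score of 85 is compatible with a range of roughly 13 points. Lower reliability widens the band.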

4. Can a person be highly intelligent and still score poorly on IQ tests? If so, in what ways is that situation possible?

There are countless ways in which this can happen. Language and other cultural barriers cause intelligence tests to produce underestimates of intelligence. It is quite common to fail to get sustained optimal effort from young children and from people with a number of mental disorders. In these cases, all but the most obtuse clinicians will recognize that something is amiss and will take appropriate action (e.g., find a more appropriate test or discontinue testing until optimal effort is again possible). Unfortunately, a single obtuse clinician can do a lot of damage.

5. What is the practical utility of IQ testing?

It is almost impossible not to be angry when we hear about incorrect decisions that result from misleading IQ scores. It is fairly common to hear members of the public and various kinds of experts indulge in the fantasy that we can do away with standardized testing. It is easy to sympathize with their humanist yearnings and their distaste for mechanical decisions that are blind to each person's individual circumstances. The reason that it is important to read the works of Binet is that in them we have a first-hand account of the nasty sorts of things that would likely happen if such wishes were granted.

It seems obvious that when we are faced with a decision between doing The Wrong Thing based on false information from an IQ test and doing The Right Thing by ignoring the IQ test when it is wrong, we should do the right thing. Unfortunately, we do not live in that universe, the one in which we always know what The Right Thing is. In this universe, there is universal uncertainty, including uncertainty about what we should be uncertain about. IQ tests, error-ridden as they are, peel back a layer or two of uncertainty about what people are capable of. In the right hands, they work reasonably well. They are approximately right more often than they are grossly in error. If we did not have them, we would fall back on far more fallible means of decision making.

Much of the unease about standardized tests can be eliminated by assuring the public that few tests are used to make decisions in a truly mechanical manner. As professionals who use standardized tests, we need to communicate what it is that we actually do. For many decades, holistic judgment and statistical decision rules have co-existed in a state of constant tension. This is a healthy state of affairs. Standardized tests provide a sort of anchor point for human judgment. Unaided human reason is typically very bad at calculating relevant probabilities. Without standardized tests, hard decisions about diagnosis and qualification for services will still be made, but they will be made in a more haphazard manner.
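Base rates are a classic case of the kind of probability that unaided reasoning tends to get wrong. Suppose a screening result correctly flags 90% of people who have a condition and correctly clears 90% of people who do not. Bayes' theorem shows that when only 5% of those screened have the condition, most positive results are still false alarms. All three numbers are hypothetical and do not describe any particular test.

```python
def positive_predictive_value(base_rate: float, sensitivity: float, specificity: float) -> float:
    """P(condition | positive result), computed with Bayes' theorem."""
    true_pos = base_rate * sensitivity                 # P(condition and positive)
    false_pos = (1 - base_rate) * (1 - specificity)    # P(no condition and positive)
    return true_pos / (true_pos + false_pos)

ppv = positive_predictive_value(base_rate=0.05, sensitivity=0.90, specificity=0.90)
print(round(ppv, 2))  # 0.32
```

Intuition often suggests something close to 90%. The calculation gives about 32%, because the false alarms from the large unaffected group outnumber the true positives from the small affected group.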

On the other hand, without reasonable safeguards that allow for human judgment, standardized tests become arbitrary tyrants. Usually when we interpret cognitive ability test data, we go with what the numbers say. Sometimes, the numbers are good first approximations of the truth but need a small adjustment. Sometimes, however, they are not the truth, not even approximately. It is our prerogative to override what the numbers say when failing to do so would be illogical, impractical, or morally outrageous. The exercise of this prerogative can, of course, become a problem of its own if it is invoked too frequently. To reinvigorate one’s capacity for mature humility, I recommend re-reading every few years the thoughts of Paul Meehl on this topic (e.g., Grove & Meehl, 1996; Meehl, 1957).

6. Why do IQ tests measure “General Knowledge” and obscure vocabulary words? Is knowledge of obscure facts really “intelligence,” or is it just useless knowledge?

If we think of IQ as an estimate of pure potential, including tests of acquired knowledge in IQ is a very bad idea. We have pretty good tests that estimate various kinds of raw cognitive power (e.g., working memory tests and processing speed tests). We have reasonably good tests of reasoning ability that do not require specific content knowledge. However, if we think of IQ tests as prediction devices, there is no better predictor of future learning than past learning. Furthermore, past learning does not just predict future learning; it often enables it.

Well-designed knowledge tests do not just measure memory for stupid facts. Rather, they measure understanding of certain cognitive tools that facilitate reasoning and problem solving. To take an obvious example, knowledge of basic math facts (e.g., 6 × 7 = 42) enables a person to perform feats of reasoning that are otherwise impossible. In a less obvious way, knowledge of certain words, phrases, and stories facilitates reasoning. IQ tests measure knowledge of well-chosen words, phrases, and stories because people with this knowledge are likely to be able to exercise better judgment in difficult situations.

Words

Certain vocabulary words allow us to communicate complex ideas succinctly and make us aware of distinctions that might otherwise escape our notice. In some cultures, personal bravery is a primary virtue and cowardice is to be avoided at all costs. In such a context, there are great advantages in having words that distinguish between admirable fearlessness (heroic, courageous, valiant) and foolish fearlessness (reckless, brash, cocksure). Perhaps even more important is the distinction between shameful fear (faint-hearted, spineless, milquetoast) and wise fear (wary, prudent, shrewd). Knowledge of such words allows a person to communicate with peers about the need for caution without being accused of cowardice. Otherwise, if there is no honorable way to talk about caution, the honorable are left with no choice but folly and self-destruction. I may be overstating my case a bit here for effect, but it is no exaggeration to say that words are powerful tools. People without those tools are at a severe disadvantage.

Phrases

The collective wisdom of a culture is preserved in quotes (“The Nation that makes a great distinction between its scholars and its warriors will have its thinking done by cowards and its fighting done by fools.”), clichés (“Live to fight another day.”), and catchphrases (“Speak softly and carry a big stick.”). Those who do not know the meanings of proverbs (“Discretion is the better part of valor.”) must figure things out for themselves, by trial and error (i.e., mostly error).

Proverbs, too, are tools, little cognitive enhancers. Sure, you can pound a nail with your bare hands, but even the strongest hands can’t compete with a hammer. Choosing which proverb fits the situation, of course, still requires judgment. Hammers are great, but not for tightening screws.

Stories

Most events in history are immediately forgotten, even by historians. Those that are recorded tend to be important. Those that are repeated and remembered over centuries tend to contain something of central importance to the culture. For example, the phrase “Pyrrhic victory” may not be widely known or used, but it has survived among educated readers because it uses an episode of history to express wisdom in an evocative and pithy way. Certain key episodes from history serve as templates for our decision-makers (e.g., Napoleon’s invasion of Russia, Neville Chamberlain’s appeasement of Hitler, the Vietnam War as a “quagmire”). In a democracy, it is of vital importance that citizens have many good templates from which to draw. Without deep knowledge of the history of the early Roman Republic, George Washington might not have seen the wisdom of relinquishing power after two terms. Without deep appreciation for history, his contemporaries would not have called Washington “The American Cincinnatus,” renaming a new city in Ohio in his honor. It is one thing for voters to understand in the abstract that term limits are there for a good reason. For a republic to be dictator-proof, it must have a long tradition of honoring powerful and popular leaders for stepping down voluntarily.

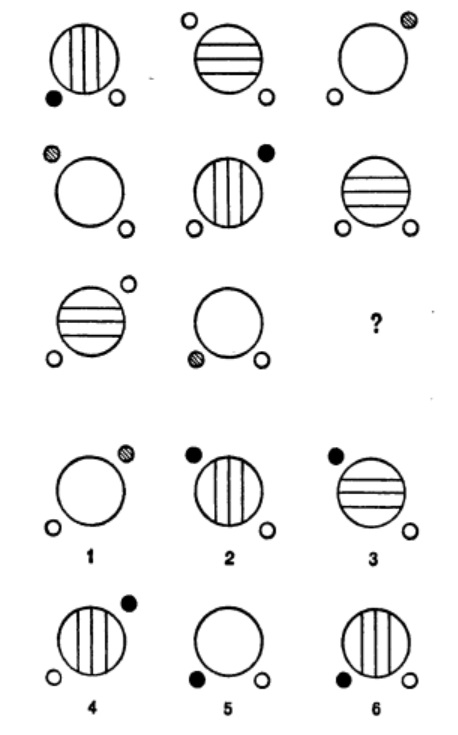

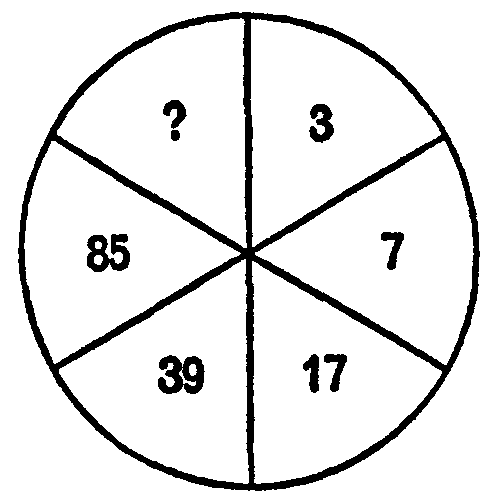

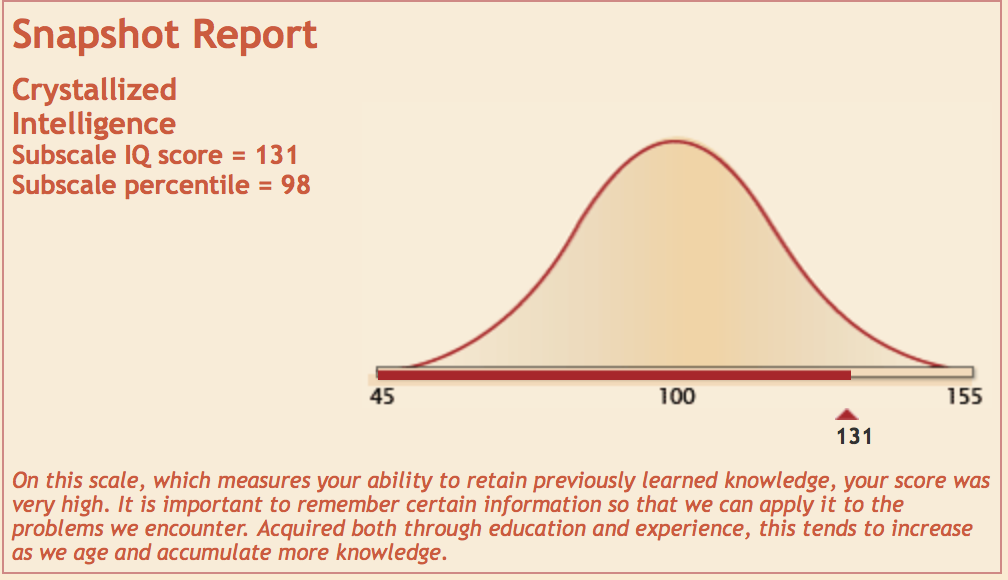

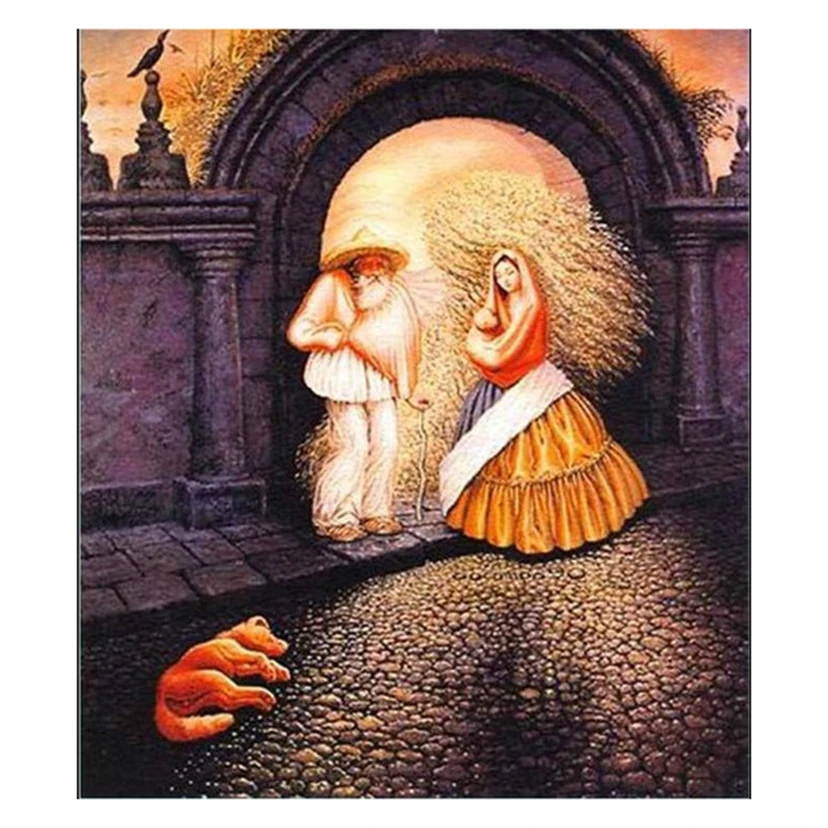

Crystallized Intelligence Visualized

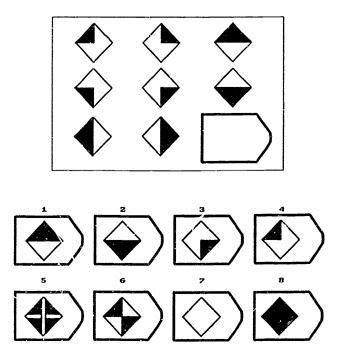

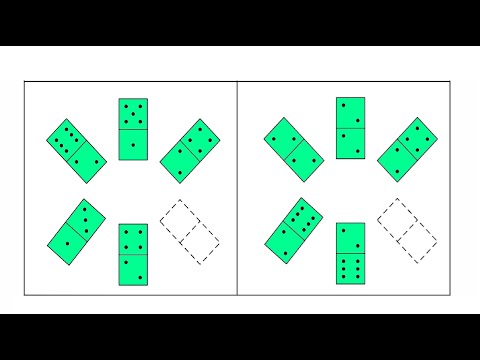

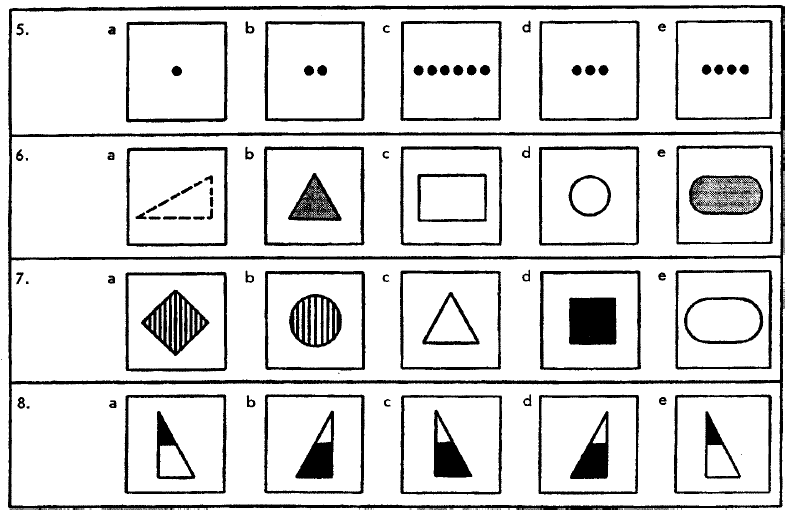

7. Do so-called measures of “fluid intelligence” (on-the-spot novel problem solving and reasoning) measure fluid intelligence to the same degree in all children?

No psychological or academic test measures anything to the same degree in all children. It is true that well-designed tests of abstract reasoning reduce the need to have specific content knowledge. However, the process of engaging in abstract reasoning is itself learned and very much influenced by culture. James Flynn has been most articulate on this point. It is right to measure abstract reasoning capacity, but it is a mistake to think of the ability and willingness to engage in abstract reasoning as divorced from a number of important concrete cultural realities. Some cultures must emphasize the practical here-and-now of day-to-day survival over the what-ifs and maybes of the never-gonna-happens.

Ancient Greek culture was very strange in its appreciation for abstraction (though not unique; India is the cradle of many an abstraction, and Arabic scholars, with their placeholding Arabic numerals, gave us the ultimate tool for managing abstractions: algebra). Truth is, most ancient Greeks probably did not care much for abstraction either. When Greek philosophers began systematically exploring the realms of abstraction, it was dangerous territory. Socrates, with his crazy questions, was seen as a real threat.

Our capacity for abstract reasoning is a recent innovation on the evolutionary time scale. As capacities go, it is a half-baked and buggy bit of software: it is fragile, inconsistent, error prone, and easily overridden by all sorts of weird biases. It is disrupted by being a little bit tired, or distracted, or drunk, or worried, or sick, or injured; the list goes on and on. The weak link in the system is probably the extremely vulnerable working memory/attentional control mechanisms. Almost every psychological disorder, from depression to schizophrenia, is associated with deficits and inefficiencies in these systems.

Compared to the engineering marvel that is our brain’s robust visual information processing system, it was relatively easy for computer scientists to design better logic processors than the ones our brains have. Yet, in this era, those members of our society who master the tools of abstraction can leverage their advantage to acquire unprecedented levels of wealth. They also come in pretty handy for those of us who derive deep satisfaction from scientific exploration and artistic expression.

8. Which do you think is more important, high IQ or high intellectual curiosity?

The relationship between IQ, curiosity, discipline, and achievement is like that of length, width, depth, and volume.

9. What is the relationship between IQ and creative productivity?

"[W]hat I write is smarter than I am. Because I can rewrite it."

I was intrigued by this Susan Sontag quote that someone I follow retweeted. Then I found and fell in love with the whole essay. High IQ is nice to have and there is abundant evidence that it is substantially correlated with creative productivity. On the other hand, many people with high IQ fail to create much of anything and many people with moderate intellect achieve lasting greatness. Sontag’s insight suggests how we can transcend our limitations.

10. Do you think ADHD is overdiagnosed?

Many members of the public worry that ADHD is not a real disorder: it is just an excuse for lazy parents and bad teachers to medicate kids, kids who are essentially normal but maybe a little exuberant and a little hard to handle. The public is right to worry! We don’t want to mislabel normal children and give them drugs they don’t need. However, ADHD IS a real disorder. If you have ever worked with a child with a severe case of it, you know that it is not mere rambunctiousness that is keeping the child from making friends, performing well in school, and preparing for life as an adult.

Just as we worry about mislabeling and overmedicating children who do not have ADHD, we should also worry about failing to identify children who do have ADHD. Those kids are equally mislabeled. They are called lazy. They are called unmotivated. They are called irresponsible. If they play by the rules, they are called spacey. If they don’t, they are called no-darn-good (and much, much worse). In time, many of them come to accept these labels (lazy, unmotivated, irresponsible, no-darn-good) and apply them to themselves. By the time they reach adulthood, they often have two or more decades of failed plans and failed relationships behind them. Their ADHD is discovered for the first time when they seek help, not for their impulsivity, not for their attention problems, but for their depression. We need to do right by all children. The current methods of assessing ADHD are clearly suboptimal but, if applied competently, work reasonably well. Currently I (along with many other scholars) am trying to find better methods of assessing ADHD.

11. Tell me about your software, the “Compositator”.

The Compositator, despite its silly name, was a labor of love many years in the making. It was a demonstration project of the kinds of features that I believe should be available in the next generation of test scoring and interpretation software. I hope that the next editions of the major cognitive batteries borrow from it as much as they please. The software manual lays out every equation needed.

The feature that gives the Compositator its name is its ability to create custom composite scores so that all assessment data can be used more efficiently and reliably. This is a useful feature but it is far from the most important one. The major contribution of the Compositator to the art and science of psychological assessment is that it frees the clinician to ask and answer a much broader set of questions about individuals than was possible before. It can do this because it not only calculates a wealth of information about custom composite scores, but it also calculates the correlations between official and custom composite scores. This seemingly simple feature generates many new and exciting interpretive possibilities, from the use of simple regression to path analysis and structural equation modeling applied to individuals and presented in user-friendly path diagrams and interactive charts and graphs.
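The following minimal Python sketch makes the idea of a custom composite concrete. It assumes unit-weighted composites of subtest standard scores (mean 100, SD 15) and a known correlation matrix among the subtests. The function names and all correlation values are hypothetical illustrations, not the Compositator's actual code or WJ-III NU norms. The sketch relies on one fact: the variance of a sum of z-scores equals the sum of every entry in their correlation matrix. The same fact also yields the correlation between two composites.

```python
import math

def composite_score(scores, R, mean=100.0, sd=15.0):
    """Unit-weighted composite of subtest standard scores.

    scores: subtest standard scores (mean 100, SD 15).
    R: correlation matrix among those subtests (list of lists).
    """
    z_sum = sum((x - mean) / sd for x in scores)
    # Variance of a sum of z-scores = sum of every entry in the correlation matrix.
    var_sum = sum(sum(row) for row in R)
    return mean + sd * z_sum / math.sqrt(var_sum)

def composite_correlation(R, a, b):
    """Correlation between two unit-weighted composites.

    R: correlation matrix for all subtests; a, b: index lists for each composite.
    Overlapping composites are allowed: shared subtests contribute R[i][i] = 1.
    """
    cov_ab = sum(R[i][j] for i in a for j in b)
    var_a = sum(R[i][j] for i in a for j in a)
    var_b = sum(R[i][j] for i in b for j in b)
    return cov_ab / math.sqrt(var_a * var_b)

# Hypothetical correlations among three subtests (illustrative only).
R = [[1.0, 0.6, 0.5],
     [0.6, 1.0, 0.4],
     [0.5, 0.4, 1.0]]

print(round(composite_score([85, 90, 80], R), 1))
print(round(composite_correlation(R, [0, 1], [2]), 2))
```

With these made-up correlations, subtest scores of 85, 90, and 80 yield a composite of about 81.6, below the simple average of 85. A consistent pattern of low scores across correlated subtests is rarer than any single low score.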

Traditionally, the first step in detecting a learning disorder is to show that there is a discrepancy between academic achievement and expectations, given some estimate of general reasoning ability. Whether they are aware of it or not, assessment professionals who use the predicted-achievement method to estimate expected achievement scores are using a simple regression model. A single predictor, usually IQ, is used to forecast an outcome. Unfortunately, this method typically involves numerous, unwieldy tables and tedious calculations.
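The predicted-achievement method amounts to a single-predictor regression on standard scores, sketched minimally below. The sketch assumes IQ and achievement are normally distributed standard scores (mean 100, SD 15) with a known correlation `r_xy`; the function names and example numbers are hypothetical. It returns three values:

- the predicted score;
- the discrepancy in standard-deviation units;
- the estimated proportion of the population with a discrepancy at least that large in the negative direction.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def predicted_achievement(iq, r_xy, mean=100.0):
    """Simple regression on standard scores: the prediction regresses toward the mean by r_xy."""
    return mean + r_xy * (iq - mean)

def discrepancy(iq, achievement, r_xy, mean=100.0, sd=15.0):
    """Return (predicted score, discrepancy z, proportion of population at or below that z)."""
    predicted = predicted_achievement(iq, r_xy, mean)
    # Standard error of estimate: SD of (actual - predicted) in the population.
    resid_sd = sd * math.sqrt(1.0 - r_xy ** 2)
    z = (achievement - predicted) / resid_sd
    return predicted, z, normal_cdf(z)

# Hypothetical case: IQ 115, achievement 85, assumed IQ-achievement correlation .60.
predicted, z, base_rate = discrepancy(115, 85, 0.60)
print(f"predicted={predicted:.1f}, z={z:.2f}, base rate={base_rate:.3f}")
```

Take an IQ of 115, an achievement score of 85, and an assumed correlation of .60. The predicted score is 109 and the residual SD is 12, so the discrepancy is -2.0 SD. Roughly 2.3% of the population would show a discrepancy that large.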

The second step in the process is to identify relevant predictors (e.g., rapid automatic naming, phonological processing) that can plausibly explain the discrepancy. The Compositator gives the user the ability to select any set of predictors that are judged to be relevant to an outcome. Including additional predictors in the analysis should allow us to explain the academic outcome more fully and to tailor the explanation to the individual. With the help of the Compositator program, the user is also able to calculate whether actual achievement is significantly lower than predicted achievement, the estimated proportion of the population that has a discrepancy as large as the observed discrepancy, and how each of these predictors contributes to the academic outcome. Thus, the Compositator uses an individual’s WJ-III NU profile to automatically generate a large amount of information that was previously difficult or tedious to obtain.
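Extending the same idea to several predictors gives multiple regression. The sketch below assumes standard scores, a known correlation matrix among predictors (`Rxx`), and the predictors' correlations with the outcome (`rxy`). From these it computes:

- the standardized regression weights;
- the predicted score;
- each predictor's contribution in points;
- the base rate of the observed discrepancy.

The helper names and example correlations are hypothetical. The sketch also omits the reliability-based test of whether the discrepancy is statistically significant.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small systems)."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def regression_profile(scores, Rxx, rxy, actual, mean=100.0, sd=15.0):
    """Multiple regression on standard scores using only correlations.

    Returns (predicted score, per-predictor contributions in points,
    discrepancy z, proportion of population with a discrepancy that low or lower).
    """
    z = [(s - mean) / sd for s in scores]
    beta = solve(Rxx, rxy)                      # standardized regression weights
    r2 = sum(b * r for b, r in zip(beta, rxy))  # variance in the outcome explained
    contributions = [sd * b * zi for b, zi in zip(beta, z)]
    predicted = mean + sum(contributions)
    z_disc = (actual - predicted) / (sd * math.sqrt(1.0 - r2))
    return predicted, contributions, z_disc, normal_cdf(z_disc)

# Hypothetical: two cognitive predictors and a reading outcome (made-up correlations).
Rxx = [[1.0, 0.3],
       [0.3, 1.0]]
rxy = [0.5, 0.4]
predicted, contributions, z_disc, base_rate = regression_profile([80, 85], Rxx, rxy, actual=75)
print(round(predicted, 1), [round(c, 1) for c in contributions], round(z_disc, 2), round(base_rate, 3))
```

With a single predictor, the function reduces to the simple regression of the predicted-achievement method, which is a quick sanity check on the arithmetic.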

One innovation made possible by the Compositator is the freedom to include not only cognitive predictors but also other academic achievement variables as predictors. For example, it is possible to determine if a child’s reading comprehension problems can be plausibly explained by reading fluency or single-word decoding problems, after controlling for relevant cognitive abilities.

Going beyond basic multiple regression analysis, the Compositator allows users to examine both direct and indirect effects of different abilities using path analysis. For example, after controlling for crystallized intelligence, auditory processing has an almost insignificant direct effect on reading comprehension in almost every age group; however, it has a substantial indirect effect through single-word decoding skills. Identifying this previously hidden indirect connection between auditory processing and reading comprehension has important implications for interpretation of assessment data and for intervention planning. The Compositator can estimate “what-if” scenarios. For example, if auditory processing skills were to improve by 15 points, by how many points are single-word decoding skills likely to improve and, in turn, by how many points is reading comprehension likely to increase?
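The direct, indirect, and what-if calculations can be sketched for a small recursive path model. The model structure and every coefficient below are hypothetical. They were chosen only to mimic the pattern described above: a small direct effect of auditory processing on reading comprehension and a larger indirect effect through decoding. They are not estimates from WJ-III NU or any other data. The sketch assumes standardized coefficients and a shared SD-15 metric for all variables, so a 15-point change in one variable propagates as the total effect times 15 points. Total effects are computed by summing, over every directed path, the product of the coefficients along it.

```python
# Hypothetical standardized path coefficients for an illustrative recursive model.
# Ga = auditory processing, Gc = crystallized intelligence (values are made up).
paths = {
    ("Ga", "Decoding"): 0.50,
    ("Gc", "Decoding"): 0.20,
    ("Decoding", "ReadingComp"): 0.60,
    ("Gc", "ReadingComp"): 0.45,
    ("Ga", "ReadingComp"): 0.05,  # small direct effect
}

def total_effect(paths, source, target):
    """Sum, over every directed path from source to target, of the product of its coefficients.

    Assumes the model is recursive (no feedback loops).
    """
    if source == target:
        return 1.0
    return sum(coef * total_effect(paths, mid, target)
               for (frm, mid), coef in paths.items() if frm == source)

def decompose(paths, source, target):
    """Split a total effect into its direct part and the indirect remainder."""
    direct = paths.get((source, target), 0.0)
    total = total_effect(paths, source, target)
    return direct, total - direct, total

direct, indirect, total = decompose(paths, "Ga", "ReadingComp")
print(f"Ga -> ReadingComp: direct {direct:.2f}, indirect {indirect:.2f}, total {total:.2f}")

# "What if" auditory processing rose by 15 points (1 SD on the standard-score metric)?
gain = 15
print(f"Decoding: +{total_effect(paths, 'Ga', 'Decoding') * gain:.1f} points")
print(f"ReadingComp: +{total * gain:.2f} points")
```

Under these made-up coefficients, a 15-point gain in auditory processing predicts about a 7.5-point gain in decoding and a 5.25-point gain in reading comprehension. Nearly all of that gain travels through the indirect path. Such projections are only as trustworthy as the assumption that the path model is causally correct.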

12. What else are you currently working on?

- Humans are fantastically good at pattern recognition and making sense of complex configurations. Unfortunately, humans (including and especially me) are fantastically bad at thinking about probability.

I have created several computer programs that are used as interpretive aids for psychological evaluations. My approach is to let computers do what they do best: calculate. Human judgment is enhanced once relevant probability estimates have been calculated.

- I am writing a book in which I explain how psychometrics can be used to understand individuals.

- I am making software that extends the Compositator idea and makes it much more flexible. I want to make it so that you can put in any SEM model and apply it to any psychological measure.

- I am doing research in which I am trying to understand why self-rated attention is so poorly correlated with cognitive measures of attention.

- I am doing a series of studies in which I hope to show that Gs (Processing Speed) = Gt (Perception Speed/Decision Time) + Attentional Fluency (The ability to direct the spotlight of attention smoothly from one thing to the next).

© 2014 Scott Barry Kaufman, All Rights Reserved.

image credit #1: my.ilstu.edu; image credit #2: assessingpsyche.wordpress.com; image credit #: assessingpsyche.wordpress.com

The views expressed are those of the author(s) and are not necessarily those of Scientific American.

ABOUT THE AUTHOR(S)

Scott Barry Kaufman, Ph.D., is a humanistic psychologist exploring the depths of human potential. He has taught courses on intelligence, creativity, and well-being at Columbia University, NYU, the University of Pennsylvania, and elsewhere. He hosts The Psychology Podcast, and is author and/or editor of 9 books, including Transcend: The New Science of Self-Actualization, Wired to Create: Unravelling the Mysteries of the Creative Mind (with Carolyn Gregoire), and Ungifted: Intelligence Redefined. In 2015, he was named one of "50 Groundbreaking Scientists who are changing the way we see the world" by Business Insider. Find out more at http://ScottBarryKaufman.com. He wrote the extremely popular Beautiful Minds blog for Scientific American for close to a decade.

Test: What is your psychological IQ?

Published by Sergei Nikonorov in Psychology tests

Many of us consider ourselves experts in psychology, but it is not enough to read a few popular books and watch a series about a psychoanalyst in order to understand this complex science! If you really consider yourself an expert in psychology, this test will be elementary for you! Let's check!

- 10% is nonsense. We use every part of our brain.

- We only use 10% of our brain capacity.

- We know very little about how our brains work.

- We use our whole brain, but there are areas whose purpose is still unclear.

- Everything that has happened to us is stored in our memory, but we can't always find it.

- True

- True

- No idea

Did you like it?

210 Points

Yes No

singlepagesciencepsychologytest

Don't miss

-

in Psychology Tests

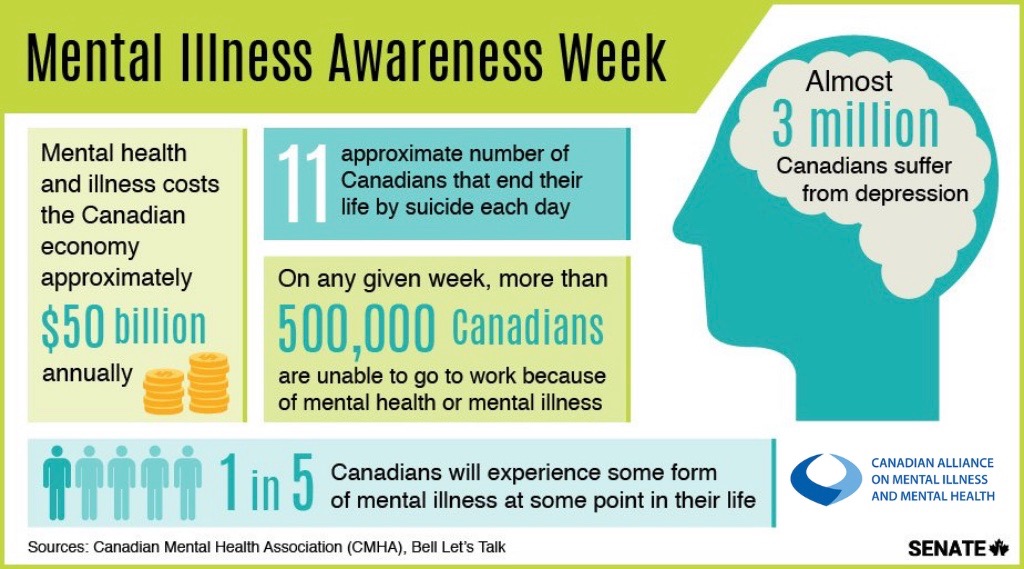

Mental illness test - mental disorders. What do you know about them?

Answer a few questions and see if you understand mental illness. Take the test Next question Take the test again More than

-

in Psychology tests

Test for knowledge of myths about the founder of psychoanalysis Sigmund Freud and his theory

Sigmund Freud in his writings attached great importance to myths.

However, his own biography eventually acquired them. We invite you to take the test and show your […] More

However, his own biography eventually acquired them. We invite you to take the test and show your […] More -

in Psychology tests

Test: Are you a good psychologist?

Perhaps the result of this test will make you think about understanding some situations. Find out more about yourself with the test! Take the test at this link if it is […] More

-

in Psychology tests

Psychological test: What is your creative power?

According to psychologists, each person is driven by his own strength, which helps him to gather his courage in the most difficult situation. This quiz will help you discover the […] More

-

in Psychology tests

Test: Do you know psychology?

We offer you a simple test that will show if you understand psychology. In this test, you will be asked questions about the history of psychology, as well as […] More

-

in Psychology tests

Test: Can you guess the author's mental disorder from his drawing?

Drawings of mentally ill people allow doctors to understand what is happening in the minds of patients, and healthy people to look at the world with different eyes.

Can you […] More

Can you […] More

Test: Find out what your psychological IQ is

This article was created by Onedio user lucky_lucy and was not edited by the editorial staff.

Consider yourself an expert in psychology? Let's check!

Source: http://www.playbuzz.com/samanthajones11/...

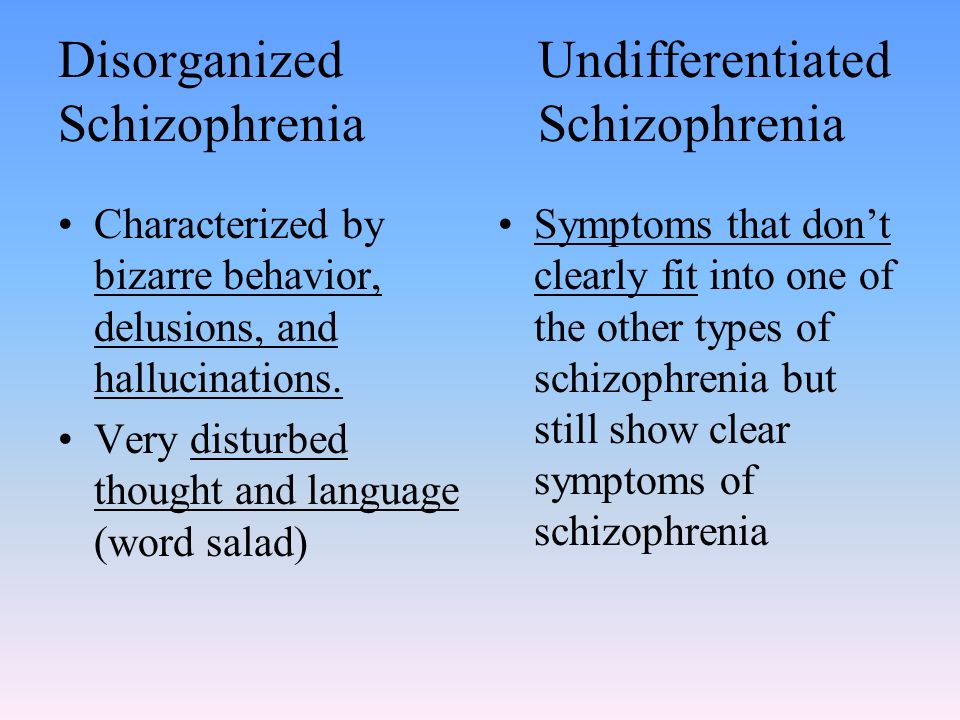

1. Let's start: schizophrenia is...?

Conflict in thoughts, behavior and emotions

Voices in the head

Severe mood swings and outbursts of violence

Having multiple personalities in one person

2. What attracts people when they first meet?

Opposites attract

Shared beliefs and values

Body chemistry

My strengths compensate for my partner's weaknesses

3. Lie detector...?

Often wrong

Tells 90% of the truth

Can only study psychopaths

Shows only 50% of the truth

4. Which statement is true about your brain?

We use our brain to the fullest, but there are still areas that have not been studied

We use all parts of our brain

We use only 10% of our brain capacity

We know almost nothing about how our brain works

5. How do people behave under hypnosis?

Like obedient robots

As if awakened by an alarm clock

As if drunk

As if in a deep sleep

6. Is playing sports the best way to cope with anger?

False

True

7. Is a happy worker a productive worker?

True

False

8. Are attractive people judged by others as more successful and smart?

No

Yes

No idea

9. What is the name of the phenomenon in which a hostage shows understanding towards his kidnapper?

No idea

Norwegian Syndrome

Copenhagen Syndrome

Stockholm Syndrome

None of the above

10. Last question: in a dark room, a point of light will appear to move. This illusion is called...?

Purkinje reflex

Autokinetic illusion

Ponzo Illusion

Optical Motion Theory

Wow! Your psychological IQ is 160!

You know more about psychology than most people around you.

Wow! Your psychological IQ is 200!

You can safely consider yourself a psychologist.

Your psychological IQ is 120!

You should consider becoming a professional psychologist.

Your psychological IQ is 70!

You should brush up on your knowledge of psychology.