Frontal lobotomy history

Violence, mental illness, and the brain – A brief history of psychosurgery: Part 1 – From trephination to lobotomy

Surg Neurol Int. 2013; 4: 49.

Published online 2013 Apr 5. doi: 10.4103/2152-7806.110146

Psychosurgery arose early in human prehistory, in the form of trephination, perhaps out of a need to alter aberrant behavior and treat mental illness. The “American Crowbar Case” provided an impetus to study the brain and human behavior. The frontal lobe syndrome was avidly studied. Frontal lobotomy was developed in the 1930s for the treatment of mental illness and to solve the pressing problem of overcrowding in mental institutions in an era when no other forms of effective treatment were available.

Lobotomy, popularized by Dr. Walter Freeman, reached its zenith in the 1940s, only to fall into disrepute in the late 1950s. Other forms of therapy were needed and psychosurgery evolved into stereotactic functional neurosurgery. A history of these developments up to the 21st century will be related in this three-part essay-editorial, exclusively researched and written for the readers of Surgical Neurology International (SNI).

Keywords: Frontal lobes, institutionalization, lobotomy, mentally ill, psychosurgery, trephination

Trephination (or trepanation) of the human skull is the oldest documented surgical procedure performed by man. Trephined skulls have been found from the Old World of Europe and Asia to the New World, particularly Peru in South America, from the Neolithic age to the very dawn of history.[3,14,18] We can speculate why this skull surgery was performed by shamans or witch doctors, but we cannot deny that a major reason may have been to alter human behavior – in a specialty that in the mid-20th century came to be called psychosurgery!

Prehistoric adult female cranium from San Damian, Peru (unhealed trepanation). Smithsonian Institution

Prehistoric adult female cranium from Cinco Cerros, Peru (unhealed trepanation). Smithsonian Institution

Surely we can surmise that intractable headaches, epilepsy, animistic possession by evil spirits, or mental illness, expressed by errant or abnormal behavior, could have been indications for surgical intervention prescribed by the shaman of the late Stone or early Bronze Age. Dr. William Osler asserted, “[Trephination] was done for epilepsy, infantile convulsions, headache and various cerebral diseases believed to be caused by confined demons to whom the hole gave a ready method of escape.”[12] Once sanctioned by the tribe, the medicine man of the pre-Inca Peruvian civilization could incise the scalp with his surgical knife and apply his tumi to the skull to ameliorate the headaches or release the evil spirits possessing the hapless tribesman. Amazingly, many of these patients of prehistoric times survived the surgery, at least for a time, as evidenced by bone healing at the edges of the trephined skulls that have been found by enterprising archeologists.[3,14] We do not know if the patients’ clinical symptoms improved or if their behavior was modified after these prehistoric operations.

Ceremonial tumi. Pre-Inca culture. Birmingham Museum of Art

Prehistoric adult male cranium from Cinco Cerros, Peru (healed trephination). Smithsonian Institution

Peruvian trephined and bandaged skull from Paracas, Nasca region, A.D. 500. CIBA Symposia, 1939; reproduced in E.A. Walker's A History of Neurological Surgery, 1967

In ancient Greece and Rome, many medical instruments were designed to penetrate the skull. The Roman surgeons developed the terebra serrata, which was used to perforate the cranium by rolling the instrument between the surgeon's hands. The great physicians and surgeons Celsus (c. 25 BC – c. AD 50) and Galen (c. AD 129 – c. AD 216) both used these instruments. It is easy to see that the terebra was the forerunner of the manual burr hole and electric drill neurosurgeons use today for craniotomy procedures.

Ancient Roman surgeons used the types of terebras illustrated here for perforating the cranium in surgical procedures

During the Middle Ages and the Renaissance, trepanation was performed not only for skull fractures but also for madness and epilepsy. There are telltale works of art from this period that bridge the gap between descriptive art and fanciful surgery. For example, we find the famous oil painting by Hieronymus Bosch (c. 1488-1516) that depicts “The Extraction of the Stone of Madness.” Likewise, the triangular trephine instrument designed by Fabricius of Aquapendente (1537-1619) was subsequently used for opening and entering the skull; triangular trephines had already been modified for elevating depressed skull fractures. And thus, we find variations of the famous engraving by Peter Treveris (1525), illustrating surgical elevation of skull fractures, in many antiquarian medical textbooks.[18]

The Extraction of The Stone of Madness (or The Cure of Folly) by Hieronymus Bosch. Museo del Prado, Madrid, Spain

Peter Treveris’ engraving of trephination instrument in the Renaissance (1525)

For most of man's recorded history, the mentally ill have been treated as pariahs, ostracized by society and placed in crowded hospitals, or committed to insane asylums.[7] Criminals were dealt with swiftly and not always with justice or compassion. Despite the advent of more humane treatment in the late 19th century, hospitals for the mentally ill were poorly prepared to cope with the medical and social problems associated with mental illness and remained seriously overcrowded.

St. Bethlehem Hospital in London (Bedlam), which opened in 1247, was the first institution dedicated to the care and treatment of the mentally ill

The early concept of cerebral localization (i.e., aphasia, hemiplegia, etc.) was derived first from the study of brain pathology, particularly cerebral tumors and operations for their removal;[9,16] and second, from the observation of dramatically altered behavior in a celebrated case of traumatic brain injury to the frontal lobe that has come to be called the “American Crowbar Case.”[11,19]

In 1848, Phineas P. Gage, a construction foreman at the Rutland and Burlington Railroad, was severely injured while helping construct a railway line near the town of Cavendish, Vermont. A premature explosion propelled a tamping iron – a long bar measuring 3 feet 7 inches in length and 1.25 inches in diameter – through Gage's head. The 13.25 pound rod penetrated his left cheek, traversed the midline, the left frontal lobe, and exited the cranium just right of the midline near the intersection of the sagittal and coronal sutures.

Phineas Gage case, trajectory of iron rod through cranium

The 25-year-old Gage survived, but the mental and behavioral changes noted by his doctor, friends, and co-workers were significant. From being a motivated, energetic, capable, friendly, and conscientious worker, Gage changed dramatically into an obstinate, irreverent, irresponsible, socially uninhibited individual. These changes in personality would later be recognized as the frontal lobe syndrome. In the case of Gage, these changes were noted almost immediately after the injury. Close attention was paid to the case by Gage's physician, Dr. John M. Harlow, not necessarily because of the personality changes but because the patient had survived such an extensive and serious injury and surgical ordeal.

The case received notoriety in the medical community when it was thoroughly described by Dr. Harlow 20 years later in an otherwise obscure medical journal. The doctor wrote: “Previous to his injury, although untrained in the schools, he possessed a well-balanced mind, and was looked upon by those who knew him as a shrewd, smart businessman, very energetic and persistent in executing all his plans of operation. In this regard his mind was radically changed, so decidedly that his friends and acquaintances said he was ‘no longer Gage.’”[8]

Dr. Harlow followed his patient's travels across North and South America, and when Gage died in status epilepticus in 1860 near San Francisco, the doctor was able to retrieve the exhumed skull and tamping iron. Gage had continued to carry the rod wherever he went, his “constant companion.” The ghastly items were donated to Harvard Medical School's Warren Anatomical Museum in 1868 after Dr. Harlow had finished his classic paper. The skull and tamping iron remain there to this day.

Phineas Gage with his tamping iron (c. 1860)

The historic operation that we can arguably describe as the first psychosurgery procedure was performed by psychiatrist Dr. Gottlieb Burckhardt (1836-1907) in Switzerland in 1888. Burckhardt removed an area of cerebral cortex that he believed was responsible for his patient's abnormal behavior. He followed this by performing selective resections, mostly in the temporal and parietal lobes, on six patients, targeting areas that he considered responsible for his patients’ aggressive behavior and psychiatric disorders. His report was not well received by his colleagues and met with disapproval by the medical community. Subsequently, Burckhardt ceased work in this area.[15]

The Second International Neurologic Congress held in London in 1935 was a landmark plenary session for psychosurgery. American physiologist Dr. John F. Fulton (1899-1960) presented a momentous experiment in which two chimpanzees had bilateral resections of the prefrontal cortex. These operations were pioneering experiments in the field because the animals became “devoid of emotional expression” and were no longer capable of arousal of the “frustrational behavior” usually seen in these animals. The behavioral change was noted but the full implications were not. These findings would become very important decades later when social scientists in the 1970s noted that aggressive behavior and rage reaction were associated with low tolerance for frustration in individuals with sociopathic tendencies.[2]

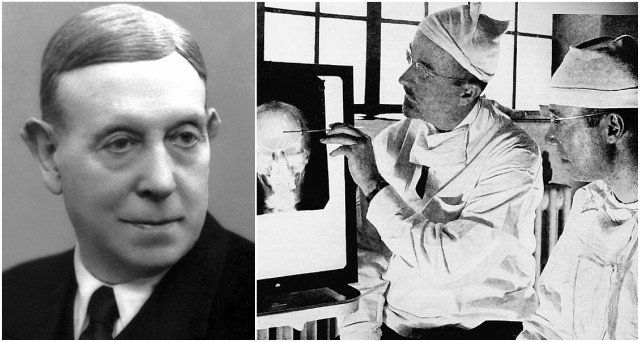

This Congress was also historic because it was attended by figures who would leave their mark on the history of psychosurgery, the neurosurgical treatment of mental disorders. Among the participants were two Portuguese neuroscientists: Dr. Antonio Egas Moniz (1874-1955), Professor of Neurology at the University of Lisbon, and his collaborator, the neurosurgeon Dr. Almeida Lima (1903-1985). They worked together in performing frontal leucotomies for psychiatric illnesses in the 1930s. In fact, Dr. Moniz's efforts supported physiologist John Fulton's finding that frontal lobe ablation subdued the behavior of aggressive chimpanzees. Also attending this Congress was American neurologist Dr. Walter Freeman (1895-1972), who would soon leave a large imprint on psychosurgery in the form of the frontal lobotomy.[13,15]

But there was more. Dr. R. M. Brickner described a patient with bilateral frontal lobectomies for excision of a tumor. Postoperatively his patient showed a lack of restraint and social disinhibition, providing further evidence of the frontal lobe syndrome.[15]

Frontal lobotomy, the sectioning of the prefrontal cortex, and leucotomy, the severing of the underlying white matter, for the treatment of mental disorders, reached a peak of popularity after World War II. But, as we have seen, development of this surgery began in the 1930s with the work of the celebrated Egas Moniz, who also made his mark in neuroradiology as the father of cerebral angiography. Moniz and Lima performed their first frontal leucotomy in 1935. The following year Dr. Moniz presented a series of 20 patients, and by 1949 he had received the Nobel Prize for his pioneering work on frontal leucotomy in which, specifically, the white matter connections between the prefrontal cortex and the thalamus were sectioned to alleviate severe mental illness, including depression and schizophrenia in long-term hospitalized patients.

Professor of Neurology, Dr. Antonio Egas Moniz (1874-1955)

It was at this time that a constellation of symptoms finally became associated with frontal lobe damage and removal – for example, distractibility, euphoria, apathy, lack of initiative, lack of restraint, and social disinhibition. Some of these symptoms were reminiscent of the personality changes noted by Dr. John M. Harlow in Phineas Gage nearly three quarters of a century earlier.

It must be remembered that this movement toward surgical intervention did not occur in a vacuum. It was engendered at a time when drug therapy was not available, and it involved mostly severely incapacitated patients for whom psychotherapy was ineffective or unavailable. Physicians, particularly psychiatrists and neurologists, those directly taking care of these unfortunate patients, were pushed against the wall to come up with effective therapy to modify abnormal psychiatric behavior and ameliorate mental suffering.

The problem of the increasing number of mentally ill patients for whom no effective treatment was available, except for long-term hospitalization and confinement, had been noted since the 19th century, and by the early 20th century the problem had reached gigantic proportions. Psychotropic drugs were not available until the 1950s, and in their absence, the only treatment options used in conjunction with long-term hospitalization were physical restraint with the feared strait jackets, isolation in padded cells, and the like – conditions almost reminiscent of the notorious Bedlam Hospital of 19th century London.

Recently, Dr. R. A. Robison and colleagues summarized the socioeconomic context in which psychosurgery was advanced in the late 1930s by citing a 1937 report on the extent of institutionalization of the mentally ill in the United States: “There were more than 450,000 patients institutionalized in 477 asylums, with nearly one half of them hospitalized for five years or longer.” The cost in today's dollars is estimated to have exceeded $24 billion.[15]

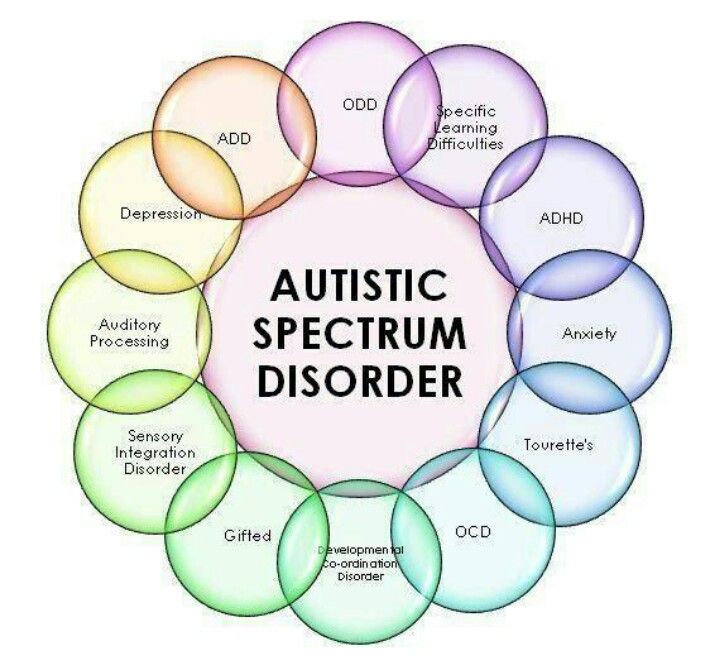

Psychotherapy, not to mention psychoanalysis, as proposed by Sigmund Freud (1856-1939), Carl Jung (1875-1961), and other prominent psychiatrists, was beyond the reach of most patients afflicted with psychiatric disorders until much later in the century. Advances in medicine and psychiatry in the latter part of the century would bring relief to untold millions but many problems even then would still persist.[2,5,15,17]

The leading lights of psychiatry at the turn of the century, Austrian psychoanalyst Sigmund Freud and German psychiatrist Emil Kraepelin (1856-1926), had conflicting approaches to mental illness. Freud recommended psychotherapy, often ineffective and almost always unfeasible in severely mentally ill patients, whose diseases ranged from tertiary syphilis and severe anxiety-neuroses to agitated depression and schizophrenia. Kraepelin, in contrast, preferred more aggressive intervention with electroconvulsive therapy (ECT) and insulin shock therapy. But what was to be done with patients who did not respond to these treatments, relapsed, or continued to pose a danger to themselves and others? That was the problem facing physicians at this time, particularly psychiatrists and neurologists. That also explains why the earliest “psychosurgeons” came disproportionately from these two specialties.

Famous German psychiatrist, Dr. Emil Kraepelin (1856-1926)

So it was in the mid-1930s, under the circumstances of ineffectual treatments and hospital overcrowding, that Moniz and Lima began to collaborate and carry out their work in Lisbon, Portugal. They developed the frontal leucotomy (or leukotomy), sectioning the white matter connections between the prefrontal cortex and the thalamus. First, they used alcohol injections; subsequently they introduced the leucotome and termed the procedure frontal leucotomy. They reported that their patients were calmer and more manageable but their affect more blunted after the operations.

In the United States, Dr. James W. Watts (1904-1994), a neurosurgeon at George Washington University, was invited to collaborate with American neurologist and neuropathologist Dr. Walter Freeman (1895-1972) in developing the transorbital lobotomy in the early 1940s.

American psychiatrist, Dr. Walter Freeman (1895-1972)

They performed the first frontal lobotomy in the U.S. in 1936, the same year Moniz presented his series of 20 patients from Portugal. With his knowledge of neuropathology, Freeman was able to visualize and analyze retrograde degeneration in postmortem examination of patients who had undergone the procedure and died later. He modified Moniz's leucotome for better precision in targeting specific frontal lobe-thalamic tracts. Patients with affective disorders had their leucotomy more anterior, whereas in those afflicted with more severe schizophrenic symptoms the lobotomy was more posterior.

In 1942, Freeman and Watts published the first edition of their classic monograph Psychosurgery and reported on 200 patients: 63% were improved; 23% had no improvement; and 14% were worsened or succumbed to their surgery.[6,15] That same year the Journal of the American Medical Association (JAMA) published an editorial supporting the basis for the procedure and the indications for lobotomies. Additional prestige for the operation was gained when Moniz received the Nobel Prize for Physiology or Medicine “for the discovery of the therapeutic value of leucotomy in certain psychoses” in 1949.[10]

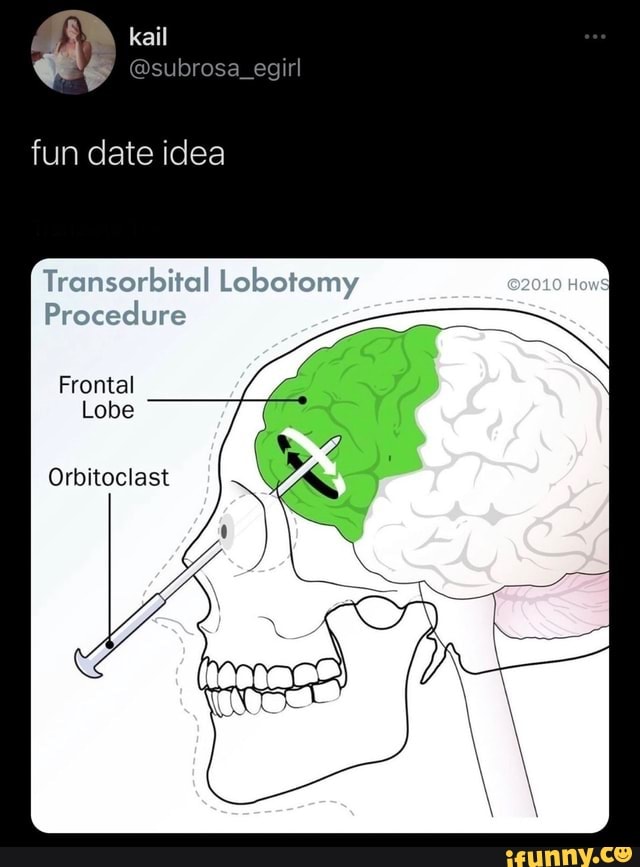

Freeman had used alcohol injections initially in some of his lobotomies, but he subsequently modified his procedure of transorbital leucotomy by using a modified ice-pick instrument to traverse the roof of the orbit and enter the base of the skull. This was frequently done with local anesthesia or with sedation following ECT. The orbitoclast was then inserted to a depth of 7 cm at the base of the frontal lobes and swept 15° laterally.[15] Freeman hoped that physicians would widely use this simple technique to treat hospitalized patients. Instead, the lack of sterile techniques and the crudity of the procedure disenchanted Watts, his neurosurgical associate, and also antagonized the neurosurgical establishment.[13] Many physicians became disappointed with the results, as some patients developed complications or died. But the majority of patients were improved, severe symptoms ameliorated, most families were gratified, and institutionalized patients became more manageable in the various institutions.[6,10,13,15]

Dr. Walter Freeman, “The Lobotomist,” at work

Nevertheless, Freeman and Watts parted and went separate ways. Freeman became a relentless crusader and performed over 4000 lobotomies. Robison et al. thus summarized the situation in a recent review article: “Despite resistance and reservations in the broader medical community, many psychiatrists and nonsurgical practitioners seized on the procedure as a new last resort for patients who lacked any effective alternate treatments.” Frontal lobotomy became a widely used procedure in the U.S., and an estimated 60,000 procedures were performed in the U.S. and in Europe between 1936 and 1956.[15]

By 1952, one of the pioneers of psychosurgery, the famed American physiologist John Fulton, was announcing the end of lobotomy and ushering in the beginning of stereotactic and functional neurosurgery, which offered improved precision and ablated less cerebral tissue. Chlorpromazine, which had been introduced in Europe in 1953, became available in the U.S. in 1955; haloperidol followed in 1967, and the drug therapy revolution was now also underway.[1] In 1971, Freeman published his long-term follow-up of 707 schizophrenics, 4-30 years after lobotomy. He reported that despite improvement in the majority, 73% were still hospitalized or at home in a “state of idle dependency.”[10]

The need to modify abnormal behavior and ameliorate mental suffering persists in our time. It has been estimated that 5 out of 10 cases of disability worldwide are attributable to mental disorders. And major depression heads the list of these psychiatric or psychological disorders with an incidence of 12-18% projected for the lifetime of the individual of the 21st century.[15] As many as one-third of these afflicted patients have intractable depression unresponsive to antidepressant drugs or psychotherapy. We need not be reminded also that the vast majority of patients who commit suicide have suffered untreated depression or severe melancholia unresponsive to treatment.

Despite psychotropic drugs, ECT, lobotomy, and psychosurgery, the societal problems posed by the prevalence of mental illness persist to this day. The steady institutionalization of the 1930s was followed by rapid deinstitutionalization in the 1970s. In 1963, U.S. President John F. Kennedy proposed a program for dealing with the persistent, unresolved problems of the mentally ill and the growing socioeconomic concerns of their long-term institutionalization. Kennedy called for the formation of community mental health centers (CMHCs) funded by the federal government. Outpatient clinics replaced state hospitals and long-term institutionalization. Over the next 17 years, the federal government funded 789 CMHCs, spending $20.3 billion in today's dollars. “During those same years, the number of patients in state mental hospitals fell by three quarters – to 132,164 from 504,604,” laments Dr. E. Fuller Torrey, a noted psychiatrist and schizophrenia researcher. And he adds: “Those beds were closed down.”[17]

In the ensuing half century, these patients were discharged onto the streets of America, without family support and suffering from severe mental illness, including schizophrenia, bipolar and personality disorders, and criminal insanity. Half of them fare poorly.[2,4,5,17] Untreated mentally ill people in 2013, reports Dr. Torrey, are responsible for 10% of all homicides, constitute 20% of jail and prison inmates, and make up at least 30% of the homeless in the U.S.A. These homeless psychiatric patients spill over from the streets and city parks into the emergency rooms, libraries, and bus transit and train stations. Many of them join the ranks of the criminally insane and contribute significantly to the alarming statistics of crime and violence in America.

Mentally ill, homeless, and dangerous. New York Post, December 10, 2012

And so the problem of mental illness persists, influenced more by politics than mental health data and sound criminologic and sociologic scholarship.[4,5,17] Mental health deinstitutionalization poured the mentally ill onto the streets of America. The meek get preyed upon; the violent commit petty or serious crimes. Nevertheless, it should be pointed out that most mentally ill individuals are not violent but in need of medical care, compassion, and humanitarian assistance.

The mentally ill, including paranoid schizophrenics and violent individuals roaming the cities of America, add to the ranks of the homeless, and they themselves become the subject of abuse or the perpetrators of crime in part due to their mental illness. New York Post, September 2009

The social sciences in general and psychiatry in particular, despite the continued technological advances of our computer age, have not been able to keep up with the mounting psychological problems associated with this societal progress. Violent and criminal behavior, particularly when associated with repetitive, unprovoked aggression and low threshold rage reactions, also appears to be a pernicious and recalcitrant element of modern society, one that has been difficult for our social scientists to explain and solve. Part 2 discusses new forms and methods of psychosurgery via stereotactic functional neurosurgery and advances in neuroscience. Part 3 will conclude the essays.

Available FREE in open access from: http://www.surgicalneurologyint.com/text.asp?2013/4/1/49/110146

1. Brill H. Moderator. Chlorpromazine and mental health. Philadelphia: Lea and Febiger; 1955. [Google Scholar]

2. Coleman JC, Broen WE., Jr . Abnormal psychology and modern life. 4th ed. London: Scott, Foresman and Co; 1972. pp. 267–403. [Google Scholar]

3. Faria MA., Jr . Vandals at the gates of medicine — Historic perspectives on the battle for health care reform. Macon, GA: Hacienda Publishing; 1995. pp. 3–11. [Google Scholar]

4. Faria MA., Jr Shooting rampages, mental health, and the sensationalization of violence. [Last accessed on 2013 Jan 29];Surg Neurol Int. 2013 4:16. Available from: http://www.surgicalneurologyint.com/text.asp?2013/4/1/16/106578 . [PMC free article] [PubMed] [Google Scholar]

5. Fessenden F, Glaberson W, Goodstein L. They threaten, seethe and unhinge, then kill in quantity. The New York Times. 2000 Apr 9; [Google Scholar]

6. Freeman W, Watts JW. Psychosurgery. 2nd ed. Springfield, Illinois: Charles C. Thomas; 1952. [Google Scholar]

Freeman W, Watts JW. Psychosurgery. 2nd ed. Springfield, Illinois: Charles C. Thomas; 1952. [Google Scholar]

7. Haggard HW. The Doctor in History. New York: Dorset Press; 1989. pp. 355–70. [Google Scholar]

8. Harlow JM. Recovery from the passage of iron bar through the head. Publ Mass Med Soc. 1868;2:327–47. [Google Scholar]

9. Kirkpatrick DB. The first primary brain-tumor operation. J Neurosurg. 1984;61:809–13. [PubMed] [Google Scholar]

10. Kucharski N. A History of frontal lobotomy in the United States, 1935-1955. Neurosurgery. 1984;14:765–72. [PubMed] [Google Scholar]

11. Ordia JI. Neurologic function seven years after crowbar impalement of the brain. Surg Neurol. 1989;32:152–5. [PubMed] [Google Scholar]

12. Osler W. The evolution of modern medicine. New Haven: Yale University Press; 1921. pp. 6–9. [Google Scholar]

13. PBS. The American Experience — The Lobotomist, Walter J. Freeman. Video-documentary, January 21, 2008. [Last accessed on 2013 Feb 27]. Available from: http://www.youtube.com/watch?v=_0aNILW6ILk .

14. Rifkinson-Mann S. Cranial surgery in Peru. Neurosurgery. 1988;23:411–6. [PubMed] [Google Scholar]

15. Robison RA, Taghva A, Liu CY, Apuzzo ML. Surgery of the mind, mood and conscious state: an idea in evolution. World Neurosurg. 2012;77:662–86. [PubMed] [Google Scholar]

16. Stone JL. Paul Broca and the first craniotomy. J Neurosurg. 1991;75:154–9. [PubMed] [Google Scholar]

17. Torrey EF. Fifty years of failing America's mentally ill. — JFK's dream of replacing state mental hospitals with community mental health centers is now a hugely expensive nightmare. The Wall Street Journal. 2013. Feb 4, [Last accessed on 2013 Feb 4]. Available from: http://online.wsj.com/article/SB10001424127887323539804578260023200841756.html .

18. Walker AE, editor. A history of neurological surgery. New York: Hafner Publishing; 1967. pp. 1–22. [Google Scholar]

19. Walker AE, editor. A history of neurological surgery. New York: Hafner Publishing; 1967. pp. 272–85. [Google Scholar]

Articles from Surgical Neurology International are provided here courtesy of Scientific Scholar

The History of Lobotomy | Psych Central

First introduced in the 1930s, this highly traumatic brain procedure was once seen as a miracle cure for mental illness.

Though rarely performed today, the lobotomy might be the most infamous psychiatric treatment in history.

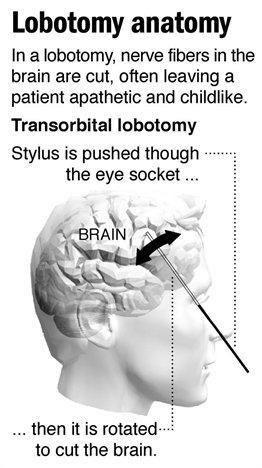

Lobotomy is a surgical procedure developed almost a century ago to treat severe mental health conditions. The procedure has varied throughout history but usually involves inserting a sharp instrument into the brain to sever certain neural connections.

Today, many people widely consider the procedure barbaric and unnecessary.

But experts once believed the lobotomy to be a miracle cure for mental health conditions like:

- treatment-resistant depression

- schizophrenia

- some personality disorders

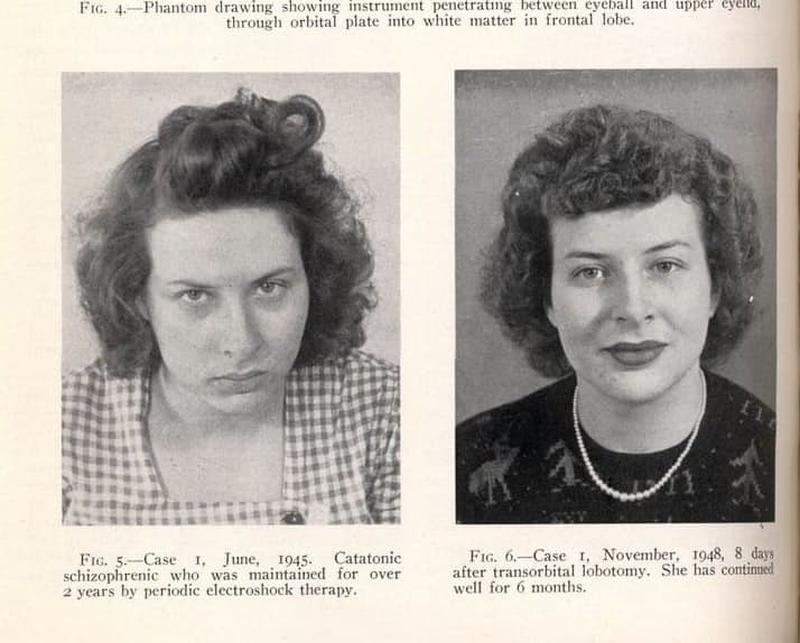

A lobotomy is certainly effective at altering behavior, and some patients seemed to improve after the procedure. But many also suffered severe and irreparable brain damage.

Since its invention in the early 20th century, the story of lobotomy has been a fascinating and disturbing one.

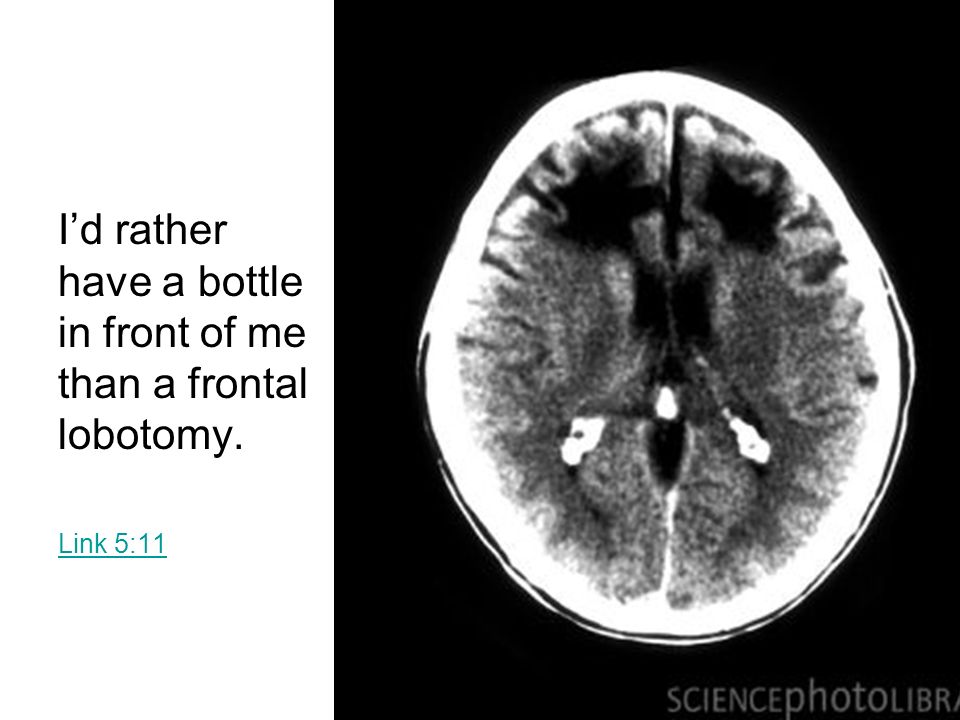

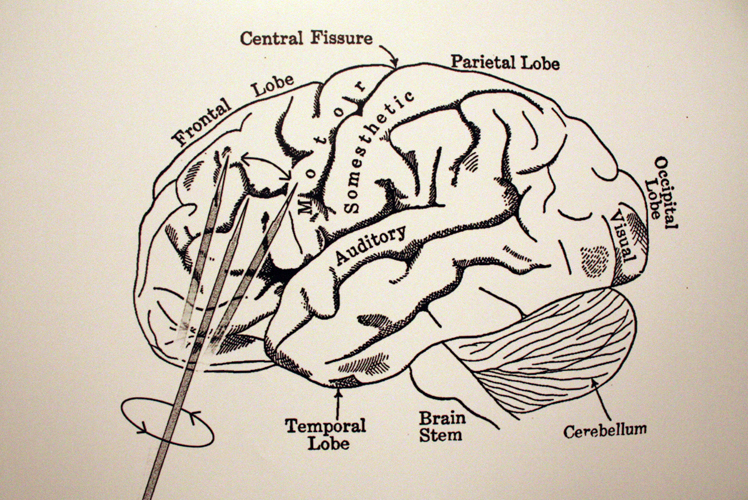

A lobotomy is a form of psychosurgery, that is, brain surgery designed to treat a psychological condition. It involves using a sharp surgical tool to sever the neural connections between the frontal lobe and other regions of the brain. The frontal lobe controls higher cognitive functions, like:

- memory

- emotions

- problem-solving skills

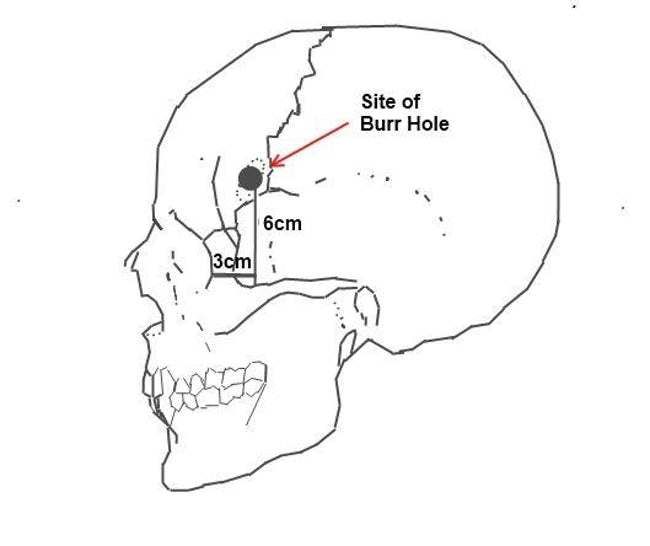

The earliest version of this procedure involved drilling holes in a patient’s head and injecting ethanol into their brain to destroy the nerve connections.

This was later refined into the prefrontal and transorbital lobotomy, which usually involves using an ice pick-like surgical instrument called a leucotome.

Prefrontal lobotomy

The surgeon drills holes in either the side or the top of the person’s skull and then uses a leucotome to manually sever the nerves between the frontal lobe and other regions of the brain.

Transorbital lobotomy

This procedure works the same way as a prefrontal lobotomy. But during a transorbital lobotomy, the surgeon accesses the person’s brain through their eye sockets.

In 1935, the Portuguese neurologist Egas Moniz invented the lobotomy and initially called it a “leucotomy.” He was inspired by the earlier work of Swiss psychiatrist Gottlieb Burckhardt who performed some of the earliest psychosurgeries during the 1880s.

In November of 1935, Moniz performed the procedure for the first time in a Lisbon hospital. He drilled holes in the person’s skull and injected pure alcohol into the frontal lobe to destroy the tissue and nerves.

In 1949, he received the Nobel Prize in medicine for inventing the procedure.

The year after Moniz’s invention, an American neurologist named Walter Jackson Freeman adopted the procedure and renamed it the lobotomy. He modified the surgery by introducing the use of a surgical tool instead of alcohol, creating the prefrontal lobotomy.

In September of 1936, Freeman and his neurosurgeon partner James Watts performed the first prefrontal lobotomy in the United States.

Later, in 1945, Freeman modified the procedure again and created the transorbital lobotomy, which he could perform quickly without leaving any scars.

The lobotomy was developed to treat severe mental health conditions and address the problem of overcrowding in psychiatric institutions during the 1930s.

Moniz thought that a physical malfunction in the brain caused symptoms like psychosis and mental health conditions such as depression. He believed he could cure them by severing the connection between the frontal lobe and other regions, forcing a kind of reset.

Moniz and Freeman both reported significant improvements in many of their patients. Although many showed no improvement — some even experiencing worse symptoms — the lobotomy still took off.

By the early 1940s, people touted the procedure as a miracle cure for mental health conditions, and experts adopted it as part of mainstream psychiatry.

What happens when someone is lobotomized?

The effects of a lobotomy are highly variable, which is one of the reasons it has become so controversial. The intention was to reduce agitation, anxiety, and excess emotion.

Some patients’ symptoms improved to the point where they could be discharged from the hospital. Others became more outspoken and experienced mood swings.

“These patients can be treated a good deal like children, with affectionate references to their irresponsible conduct. They harbor no grudges,” explained Freeman and Watts in a 1942 presentation at the New York Academy of Medicine.

But many people lost their ability to feel emotions and became apathetic, unengaged, and unable to concentrate. Some became catatonic, and a few even died after the procedure.

How common were lobotomies?

Lobotomies were widely used from the late 1930s through the early 1950s. According to one 2013 research paper, roughly 60,000 lobotomies were performed in the United States and Europe in the 2 decades after the procedure was invented.

But by the 1950s, the dangers and side effects of lobotomies were becoming widely known, drawing more scrutiny from doctors and the public.

Some high-profile incidents helped turn public opinion against lobotomies. For example, Freeman gave President John F. Kennedy’s sister Rosemary a lobotomy that left her permanently incapacitated.

Even James Watts, Freeman’s partner who helped him perform the first U.S. lobotomy, became disillusioned with the procedure by the 1950s. At the time, medications like antipsychotics and antidepressants also became widely available.

This made it easier to provide outpatient treatment for mental illness and treat symptoms without resorting to brain surgery.

Lobotomy has been banned in some places but is still performed on a limited basis in many countries. In 1950, the Soviet Union banned the use of lobotomies because it was “contrary to the principles of humanity.”

Other countries, including Japan and Germany, followed suit in later years.

In 1967, Freeman was banned from performing any further lobotomies after one of his patients suffered a fatal brain hemorrhage after the procedure. But the U.S., and much of western Europe, never banned lobotomy. And the procedure was still performed in these places throughout the 1980s.

Today, lobotomies are rarely performed, although they’re technically still legal.

Surgeons occasionally use a more refined type of psychosurgery called a cingulotomy in its place. The procedure involves targeting and altering specific areas of brain tissue.

Some surgeons may use a cingulotomy to treat obsessive-compulsive disorder (OCD) that hasn’t responded to other treatments. Doctors also sometimes use it to treat chronic pain.

Lobotomy is a controversial procedure that peaked in popularity during the 1940s. It was first performed in 1935 by Egas Moniz and then championed in the U.S. by Walter Jackson Freeman.

A lobotomy involves severing the nerve connections between the frontal lobe and other regions of the brain to reduce agitation, anxiety, and other symptoms of mental health conditions.

According to the scientists who pioneered the treatment, some patients improved after getting a lobotomy. But others developed apathy and a reduced ability to feel emotions. Some people became permanently incapacitated by the procedure, and in some cases, it was fatal.

The procedure eventually fell out of favor largely thanks to negative press and the growing availability of antidepressants and antipsychotics. Today, the procedure is banned in many countries and no longer performed in the United States.

10 facts about the terrible operation

History remembers many barbaric practices in medicine, but lobotomy is perhaps the most frightening. Today, this operation can only be seen in the cinema, but in the middle of the last century, you could well be forced to undergo a lobotomy for your wayward character.

1. Lobotomy, or leucotomy is an operation in which one of the lobes of the brain is separated from the rest of the areas, or completely excised. It was believed that this practice could treat schizophrenia.

2. The method was developed by the Portuguese neurosurgeon Egas Moniz in 1935, and a trial lobotomy took place in 1936 under his supervision. After the first hundred operations, Moniz observed the patients and made a subjective conclusion about the success of his development: the patients calmed down and became surprisingly submissive.

3. The results of the first 20 operations were as follows: 7 patients recovered, 7 patients showed improvement, and 6 people remained with the same illness. But the lobotomy continued to cause disapproval: many of Moniz's contemporaries wrote that the actual result of such an operation was the degradation of the personality.

4. The Nobel Committee considered the lobotomy a discovery that was ahead of its time. Egas Moniz received the Nobel Prize in Physiology and Medicine in 1949. Subsequently, the relatives of some patients requested that the award be canceled, since lobotomy causes irreparable harm to the patient's health and is generally a barbaric practice. But the request was rejected.

5. While Egas Moniz argued that leucotomy was a last resort, Dr. Walter Freeman considered lobotomy a remedy for all problems, including willfulness and an aggressive character. He believed that lobotomy eliminated the emotional component and thereby "improved the behavior" of patients. It was Freeman who introduced the term "lobotomy" in 1945. Over his lifetime, he operated on about 3,000 people. Incidentally, this doctor was not a surgeon.

6. Freeman once used an ice pick from his kitchen for an operation. Such a “necessity” arose because the previous instrument, the leucotome, could not withstand the load and broke in a patient's skull.

7. Freeman later realized that the ice pick was great for the lobotomy. Therefore, the doctor designed a new medical instrument based on this model. The orbitoclast had a pointed end on one side and a handle on the other. A division was applied to the tip to control the depth of penetration.

8. By the middle of the last century, lobotomy had become an extraordinarily popular procedure: it was practiced in Great Britain, Japan, the United States and many European countries. In the United States alone, about 5,000 operations were performed per year.

9. In the USSR, the new method of treatment was used relatively rarely, but it was improved. The Soviet neurosurgeon Boris Grigoryevich Egorov proposed the use of osteoplastic trepanation instead of access through the orbit. Egorov explained that trepanation would allow more precise orientation in determining the area of surgical intervention.

10. Lobotomy was practiced in the USSR for 5 years but was banned at the end of 1950. It is generally accepted that the decision was dictated by ideological considerations, because the method was most widely used in the United States. In America, incidentally, lobotomy continued to be practiced until the 1970s. However, there is also an opposing point of view: that the ban on lobotomy in the USSR was due to the lack of scientific data and the questionable nature of the method in general.

Nothing better came to mind

Today lobotomy seems like a staple of horror films: a crazed villain doctor, armed with thin spikes and a hammer, punches holes in the skulls of his victims and turns them into obedient zombies. More than 70 years ago, however, this operation was used extensively in psychiatry: in some countries, lobotomy was prescribed even for relatively mild anxiety disorders, and the Nobel Prize in Physiology or Medicine was awarded for the development of the first method of performing it. This article explains why doctors felt the need to drill holes in patients' skulls, whether it really helped, and how the Soviet lobotomy differed from the one practiced elsewhere.

William Faulkner was awarded the 1949 Nobel Prize in Literature for "significant and artistically unique contributions to the modern American novel." The prize in physics went to the Japanese physicist Hideki Yukawa for predicting the existence of mesons, particles that carry the interaction between protons and neutrons, and in chemistry, William Giauque was recognized for his experiments at record-breaking low temperatures.

The Prize in Physiology or Medicine was shared by two scientists: the Swiss Walter Hess for describing the role of the diencephalon in regulating the functioning of internal organs, and the Portuguese António Egas Moniz for the method of surgical treatment of mental disorders that he had developed a few years earlier, leucotomy, better known as lobotomy.

By the time Moniz was presented with the award, the number of lobotomies performed around the world had reached several tens of thousands and was growing at a rapid pace: most of the operations were carried out in the United States, followed by the United Kingdom and then the Scandinavian countries.

In total, until the 1980s (France was the last country to ban the operation), about a hundred thousand lobotomies were performed worldwide, and many of them had irreparable consequences.

Bur and ethanol

That such a terrible surgical operation was honored by the Nobel Committee may now seem strange, to say the least. However, it should be borne in mind that at the beginning of the 20th century, diagnostic psychiatry developed very rapidly: doctors identified schizophrenia, depression, and anxiety disorders in their patients in much the same way as modern psychiatrists do now.

But drug treatment and prevention of mental disorders were still a long way off: the first antidepressants and antipsychotics reached the market only in the middle of the century, and opiates, the once-popular panacea, had by then been recognized as life-threatening.

Psychotherapy, as well as the then popular psychoanalysis, often did not help, especially in severe cases, and there were a lot of patients in psychiatric clinics. They were treated mainly with shock therapy - but even that often did not bring relief.

In turn, doctors of the early 20th century considered surgery to be almost a universal method of treatment (at least for serious illnesses). Post-mortem autopsies conducted hundreds of years earlier had told enough about the human body to know what to cut and where, and the death rate from surgery had already declined.

Physicians were also sure that they were relatively well versed in the workings of the brain. Moniz, for example, was inspired in developing leucotomy by the work of the American physiologist John Fulton.

In the 1930s, Fulton was studying the function and structure of the primate brain and, in one of his experiments, noted that surgical damage to the white matter fibers of the frontal lobes had a calming effect: one of his test subjects, the irascible and untrainable chimpanzee Becky, after surgery became calm and relaxed.

In principle, Fulton's ideas, borrowed by Moniz, are correct: the frontal lobes do take part in the cognitive control necessary for the normal functioning of the psyche, and their connection with other parts of the brain (those that lie a little deeper and are responsible for emotional cognition) plays an important role in the development of mental disorders.

The problem is that no one really understood this role then (things are a little better now, but still far from perfect), yet Moniz, inspired by the experiments on chimpanzees, thought that success could be achieved in humans. Despite Fulton's skepticism, the future Nobel laureate performed his first lobotomy (or rather, prefrontal leukotomy) in 1935.

Moniz himself, who suffered from gout, did not take up the instruments: the operation was performed by his colleague, the neurosurgeon Almeida Lima. The first patient to undergo a lobotomy was a 63-year-old woman who suffered from depression and anxiety.

Lima drilled a hole in the frontal part of the skull with a medical drill and filled the area separating the frontal lobes from the rest of the brain with ethyl alcohol: Moniz assumed that ethanol would create a barrier that would ensure the success of the procedure.

The operation was successful, and the doctors subjected seven more patients to the same procedure: the list of indications included, in addition to depression and anxiety disorder, schizophrenia and manic-depressive disorder (now known as bipolar disorder). After that, Moniz and Lima modified the procedure: instead of injecting ethanol into the brain, they introduced a cannula with a hooked tip into the frontal lobes and used it to remove small pieces of tissue. The operation was performed on another 12 patients, and if the results did not satisfy them, Moniz and Lima could perform it again.

In total, 20 patients were included in the "first wave" of lobotomy: 12 women and 8 men aged 22 to 67 years. In seven of them, Moniz noted significant improvements, in seven more only minor ones, and the condition of six did not change at all.

At the same time, Moniz himself claimed that none of the lobotomies performed by Lima had irreparable consequences: the patients remained capable, and the only side effects were nausea, dizziness, slight incontinence and apathy.

Unlike Moniz, not all of his colleagues were optimistic about the results: the painfully manifested side effects resembled the symptoms of severe traumatic brain injuries.

In addition, some physiologists have noted a significant impact of the operation on the very personality of the patient. In their opinion, the ability of the operation to stop the symptoms of mental disorders seemed to be unimportant compared to the fact that the person simply ceased to be himself.

Despite this, after the "success" of Moniz and Lima, lobotomy began to quickly gain popularity among psychiatrists around the world, and medical companies even launched and advertised rather frightening-looking leukotomes - instruments for performing the operation.

Meanwhile in the USSR

Of course, Soviet psychiatrists did not ignore an operation so popular in the West, but they approached the matter much more seriously and responsibly.

The Soviet neurosurgeon and academician Boris Grigoryevich Egorov became the main ideologist of lobotomy in the USSR. The justification for lobotomy in Soviet psychiatry was that the separation of the frontal lobes of the brain from the subcortical zones should weaken the influence of deep structures (for example, the thalamus or the amygdala, which are responsible for the primary regulation of behavior and emotions) on the prefrontal cortex of the brain, and vice versa.

Having rightly decided that it was not advisable to perform such an operation blindly, Egorov modified the method: in the Soviet Union, a lobotomy was performed with a craniotomy, on the open brain, which made the process much more deliberate than that of Western colleagues. The indications for the operation were also rather limited: lobotomy was prescribed only for severe forms of schizophrenia in cases where no other treatment common at the time had helped.

After the first successes (improvements were observed in 60 percent of those operated on, and 20 percent regained full capacity), the operation began to be performed more often.

Soviet surgeons, however, rather quickly encountered the same problems as their Western counterparts: the operation, even with improved methods of its implementation, was still poorly substantiated theoretically, and also often led to irreparable consequences, such as complete or partial paralysis and other forms of disability.

In addition, lobotomy worked only for one form of schizophrenia, paranoid, and was not as effective in other cases, although it was still prescribed. As a result, in the 1940s only a few hundred patients underwent lobotomy in the USSR.

Fortunately, the Soviet medical authorities recognized the danger of lobotomy very quickly: as early as 1950, when the number of operations worldwide exceeded several tens of thousands per year, decree No. 1003 prohibiting frontal leucotomy was signed by decision of the Academic Medical Council of the USSR Ministry of Health.

President's sister

Despite the fact that Moniz received the Nobel Prize for the development of the lobotomy method, the name of another scientist, American Walter Freeman, is more famous in the sad story of this operation.

In December 1942, Freeman and his colleague, the neurosurgeon James Watts, published a detailed report on 136 lobotomies performed on psychiatric patients with schizophrenia, depression, mania, and schizotypal disorders.

Three patients died on the operating table, and eight more died shortly after the operation. In the vast majority, 98 people, Freeman noted significant improvements. He described in vivid colors how the new operation improved the lives of his patients, how they became happier and healthier and expressed gratitude to their savior.

However, not everything was so rosy: perhaps the most famous victim of a lobotomy, Rosemary Kennedy, the younger sister of the 35th US President John F. Kennedy, suffered at the hands of Freeman and Watts.

By the age of 23, the young woman spoke and read very poorly and was infantile and quick-tempered, and because of this (mainly in her father's view) she did not measure up to her other seven, extremely talented, brothers and sisters. In fact, Rosemary Kennedy had developmental delays that were certainly limiting but did not rule out a normal daily life.

At her father's insistence, Rosemary underwent a lobotomy, even though there were no clear indications such as schizophrenia, anxiety disorder or depression (Freeman diagnosed her with the latter retroactively, so that the medical report would still contain an indication for the operation).

Although the procedure went according to plan (like any other brain surgery, the lobotomy was performed under local anesthesia, keeping the patient conscious and making her talk), it ended extremely badly. Rosemary Kennedy's intelligence declined even further (by estimates, to the level of a two-year-old child), and she also lost the ability to walk and use her hands.

The older Kennedys placed Rosemary in a private psychiatric hospital, where they began to teach her to walk again. The patient's condition, however, did not improve much: Kennedy's eldest daughter spent the rest of her life in the clinic and died of natural causes at the age of 86.

Similar cases, however, did not stop Freeman: after the report published in 1942, he continued to perform lobotomies, and in 1948 he decided to improve and speed up the method of performing the operation - and in a rather radical and frightening way.

Ice pick

In 1948, Freeman, who, incidentally, had practically no surgical training, began experimenting with deep lobotomy: he believed that for greater effectiveness it was necessary to penetrate further into the brain. The leukotomes used during the operation sometimes broke right in the patient’s head, so Freeman once used an ice pick: he plunged it first into one, then into the other eye socket of the patient at an angle so as to penetrate the frontal lobes.

So Freeman developed another lobotomy device, the orbitoclast. In fact, it was the same ice pick, inserted into the eye socket with hammer blows. Lobotomy using an orbitoclast did not require opening the skull with a drill and, in fact, was carried out almost completely blindly: only the angle of insertion of the instrument and the depth could be adjusted.

Of course, the orbitoclast made lobotomy much more dangerous, but at the same time more popular. Due to the fact that it became easier to perform the operation, it began to be prescribed and carried out much more often: from 1949 to 1952, five thousand frontal lobotomies were performed annually in the United States.

Freeman himself, of course, led the popularization of lobotomy. In 1950, he stopped working with James Watts, who was appalled by the brutality and danger of the "improved" method of lobotomy, and began to perform operations on his own, driving around the states in a "lobocar".

Freeman performed operations without gloves and a surgical mask, in almost any conditions. Absolutely confident in the effectiveness and safety of his method, he did not even pay attention to the frighteningly high mortality rate of his patients - 15 percent - and the fact that many became mentally and physically disabled after the operation.

Freeman's patients included 19 children, the youngest of whom was four years old at the time of the operation. The last time Freeman took an orbitoclast in his hands was in 1967: for his patient Helen Mortensen, it was the third and fatal lobotomy - the woman died of a cerebral hemorrhage during the procedure.

Freeman was barred from operating and spent the last five years of his life visiting former patients and checking on their condition.

Tape on operating table

It can be said that lobotomy did have some potential. The work of the brain as a single system is supported by a huge number of connections within it. These connections are responsible for the activity of the organism as a whole: for the recognition of visual images, the performance of automatic movements, the fine work of the hands, and so on.

They are also responsible for the formation of mental disorders, and in order to combat these disorders, it is precisely with the connections in the brain that it is necessary to work first of all.

The problem is that, from the point of view of functional connections, the brain was a black box at the time psychosurgery in general (and lobotomy in particular) was developing rapidly. To a large extent, it still is. The irreparable consequences of a lobotomy, such as paralysis, are not surprising, and they were inevitable even when the operation was performed not with an instrument better suited to a bartender than a doctor, but with sterilized medical devices and the latest methods of the time.